The final frontier in science is brain theory. It holds the solution to robots and to devices that talk to us—and understand us!

Patom theory is a brain model that explains what brains do and it can be implemented on computers. In contrast, the popular models used in today’s Generative AI don’t hold up to scrutiny: their core design, to respond correctly to human input, often fails by generating answers that are untrue. They lack an accurate knowledge representation.

Prior to Patom theory, the best brain model assumed computations at its core. Many scientists and researchers accept that the brain is the organ of computation. The computer science paradigm is that the brain is a computer and that neural networks process information. Recently, the well-known Francois Chollet, now ex-Google, said babies in the womb are just ‘computing statistics’. In other words, Chollet appears to believe that his deep learning system uses the same model as the human brain, despite our knowledge from the cognitive sciences that the two are NOT similar. So now the brain is a deep learning network!? Please, let’s make it stop!

Alternatively, we can use my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science”, to explain why many propose such analogies and why the better analogy is the brain as a pattern-matcher. The book is available on Amazon.

Why choose an analogy? Our brain selects the most obvious answer, especially when there isn’t anything else! An analogy just shares a common pattern in a brain. A computer has been the only choice for a brain analogy because steam engines and holograms are outdated.

In Patom theory, brains store and decompose patterns into their atomic parts — until no more decomposition is possible. If a pattern needs to be decomposed further, it splits into parts until each part is only used once. Pattern atoms by definition need no search engine, as there is nothing to search for when there is no duplication of patterns allowed!

Pattern atoms create a situation where common patterns, like an analogy, are connected to more than one pattern.
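
One way to picture the no-duplication idea is the minimal Python sketch below. It is an illustration only, not an implementation of Patom theory: the names `PatternStore`, `add` and `parents_of` are hypothetical, and the dictionary lookup is simply a computational stand-in for "each pattern exists once". A pattern is assumed to be either a string atom or a tuple of sub-patterns.

```python
from collections import defaultdict


class PatternStore:
    """Stores each distinct pattern once and records which larger patterns use it."""

    def __init__(self):
        self._ids = {}                    # pattern content -> unique id
        self._parents = defaultdict(set)  # part id -> ids of patterns containing it

    def add(self, pattern):
        """Store a pattern (string atom or tuple of sub-patterns) and return its id."""
        if isinstance(pattern, tuple):
            # Decompose first: every part is stored (or reused) before the whole.
            key = tuple(self.add(part) for part in pattern)
        else:
            key = pattern  # an atom cannot be decomposed further
        if key not in self._ids:
            self._ids[key] = len(self._ids)  # first sighting: store it once
        pattern_id = self._ids[key]
        if isinstance(pattern, tuple):
            for part_id in key:
                self._parents[part_id].add(pattern_id)
        return pattern_id

    def parents_of(self, pattern):
        """Return the ids of every stored pattern that contains this one."""
        return self._parents[self.add(pattern)]

    def __len__(self):
        return len(self._ids)


store = PatternStore()
dog_chase = store.add(("dog", "chases", "ball"))
cat_chase = store.add(("cat", "chases", "ball"))

# "chases" and "ball" are stored once but connected to both larger patterns.
shared = store.parents_of("chases")
print(len(store), shared == {dog_chase, cat_chase})
```

In this sketch the two sentences share the atoms "chases" and "ball", so the shared atoms end up connected to more than one pattern, which is the situation described above for common patterns such as analogies.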

We need to think differently about how the brain works to solve the hard, long tail problems that have persisted for ~70 years. Those problems, dubbed ‘hallucinations,’ are errors that remain unsolved even with today’s computing power.

Since the 1950s or so, researchers have pondered how brains work in order to replicate them for AI. The best developing technology at the time was the digital computer. Under scrutiny, it comes up wanting as a possible brain model, but whether it makes sense or not, calling the brain the organ of computation brings with it the related associations - concepts like memory use, encoding, ALU and registers. Data in particular is a terrible analogy for human memory.

And as our AI experts today start with the computational model, the differences between brains and computers show up as limitations in AI.

The evolution of AI has paralleled the improvements in computers, but now is a good time to refocus on AI with superior models of cognition (how our brain works) to enable rapid replication of human brains. Emulating human brains is the first goal of AI, or at least it should be.

Patom theory is the brain theory I developed that explains a large number of observations of brain functions. It has no programming, reasoning, mind or information but it can model our capabilities and track them through the process of evolution.

Why aren’t brains computers?

Computers tend to fail catastrophically when damaged. Human and animal brains with localized damage still operate, with somewhat predictable deficits. Computers are designed with memory that can store any data, defined by its type, while the idea that a brain encodes data raises the question, “How?”

Why can regions of the human brain look anatomically identical but perform highly specific functions? The popular approach is to assume that “the program” is different in each region, causing different functions, but a better explanation is that there is no processing going on. The idea that regions store, match and use patterns is sufficient for a thought experiment to model human capabilities as an incremental evolution from animal brains. It is also consistent with observations of brains.

A better brain model enables us to throw a number of concepts into the fire while improving our ability to model human brains. We can burn up concepts like ‘intelligence’ and ‘reasoning’ along the way, since there are better ways to achieve that outcome through pattern use. AI, of course, has the word intelligence in it, which often makes people think AI is about being smart.

Disruptive science can be annoying for the current generation because they may have only been taught one way to solve a problem, but our goal is to solve it, not protect older ideas that aren’t solving the problems we face. Nobody ever proved that computers are like brains, but we tend to lock down scientific models like that, regardless of their lack of efficacy.

Human brains and language evolved from animal brains

Animals share the ‘hard’ part of AI, while the ‘easy’ parts of AI align somewhat with computers, as described by Moravec’s paradox, which reads:

“it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility”

And yet in the image below, a dog effortlessly watches a bouncing ball, moves into position to catch it in its mouth, and avoids the uneven ground and trees. Motion and senses are easy for brains from fish through the evolutionary pathway to humans.

Today’s AI and the ‘hopium’ for AGI

In contrast to today’s investments in artificial neural networks and corpus linguistics (the use of a corpus of information, such as the internet, as a training resource), human emulation requires a different approach like that adopted in our brains.

Researchers have struggled to mimic easy features of brains such as sensory recognition and motor control because the approaches are alien to each other. Brains are unlike computers. Computers were designed to emulate humans performing arithmetic, not to emulate the human itself.

Brains like the dog’s above specialize in dealing with massive ambiguity (multiple perceptions representing things) and resolving it in context (recognizing a ball as it moves and is hidden by other objects, through many sensory cues: smell, motion, sound, balance, and others). Human brains extend that ability.

By recognizing and tracking objects, brains recognize individual senses, multiple senses, and their compositions in particular contexts: where it happened, who was there, and what else was present. These are simple ‘water-hole’ skills: animals recognize the fight-or-flight situations they must handle to survive around a water hole containing predators and prey.

Human brains build on this as our brains evolved with these skills. We can tell our clan to avoid the water hole because of lions, without even visiting it. Language is an amazing survival benefit.

Human Language

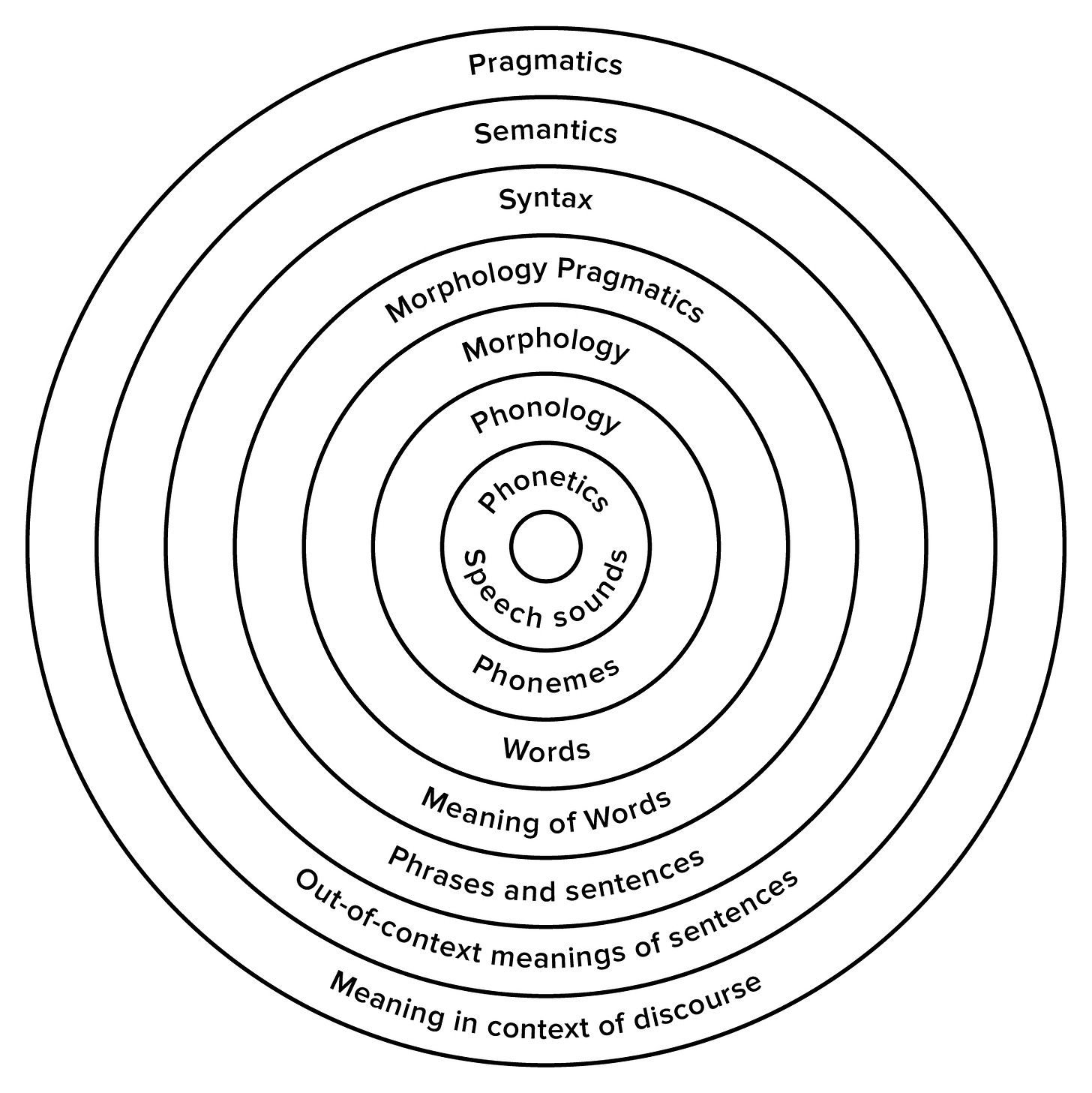

In about 100 sequential neuron activations, our brain selects actions from sensory input. That’s fast - a fraction of a second! But it also can be analyzed as a number of steps to achieve accurate recognition as in this diagram below:

Science is different from engineering

Science has been held up by approaching the model of language above as separate skills, able to be solved independently. They can’t. You usually can’t work out what a sentence means without knowing the context it is said in.

Syntax with rules, as a standalone focus of linguistics, was proposed in 1956 and remains unsolved because it is unsolvable without including semantics and context.

Instead, an integrated model is necessary to deal with the real world. That’s how the human brain works. Emulating it with other methods hasn’t worked. Animal brains appear to do this with hierarchical patterns to track context.

Once we put our scientific and engineering weight against the challenges of human emulation, we will quickly change the world for the better as devices start to work with us.

But first we need to reduce the ongoing spending of electricity and money on the hope that generative AI will work as promised by its developers, and invest about 10% of that capital in brain emulation.

What’s Next?

My Substack will be used to centralize discussions around AI and, in particular, the scientific model of Patom theory that explains how brains work. Today’s introduction explains the movement from an engineering focus on AI to a scientific one grounded in cognitive science. Human capabilities start the dialog, with many topics to come.

While the book format is the best medium to explain the big picture, this Substack can cover the specific follow-on questions as you begin to view AI through the lens of brain science. By educating and improving the model over time, we can emulate capabilities of human brains to create tools that exploit our own preferences for communication, such as human language!