Explaining Brains (Part 1)

with Pattern Theory and why it IS NOT computational

I have been developing Patom theory since 1983, for about as long as I can remember. At the end of 1999 I recorded a program to explain the difference between a brain and a computer here: “Our Brain. the Patom Matcher” (click). Brains drive humans, while computers drive our devices and today’s statistics-based AI. Today you can read what the difference is in the first instalment of the basics of Patom brain theory.

General-purpose computers have a logic unit and generic memory. Brains have specific regions based on nature. This evolutionary setup was handed down by our parents. Nurture is what we learn and store through experience.

I write this article as a response to a couple of my internet buddies and friends (AGIHound and the scientist and entrepreneur Lloyd Watts) who have said that, while my theory may be of interest, they don’t understand it enough! Therefore, they cannot advocate for it! Ouch, my writing needs improvement! So let’s address that.

Starting Point for a Brain (Nature and Nurture)

When I once jumped and froze at the sight of a little green tree snake, it wasn’t a learned response. I was never trained to be afraid of little green snakes, and yet my heart was pounding and I froze in place. That seems like nature: a response my body just made that could well have saved my life in a different situation.

Nature is what we are given through our genes. I have 5 fingers on each hand and 5 toes on each foot. I have 2 eyes in my head, and my skin has temperature and touch sensors everywhere. It’s natural and allows me to learn how to eat food with a knife and fork and speak a language.

Similarly, nature creates our brain’s basic anatomy and the connections between its regions (in our cortex, for example), and these are sufficient to enable us to learn. That is more nature!

I have always been aware of my environment from some perspective. I experience the world as some kind of cinema in which all my senses position me, with sights from my eyes and sounds from my ears, plus balance, limb position and more in my awareness. We call this part of nature consciousness.

There are many things I have learned. That’s nurture. I learned English as a child and have continued learning it to this day, using language itself to understand new concepts as they are taught to me in English or by demonstration. Children are never born with a language; they always follow a path from not speaking to speaking after birth.

This idea of nature providing language and memories is popular in science fiction. In the movie “The Fifth Element,” the cells in the arm of Milla Jovovich’s character were sufficient to restore her body (nature) but also some archaic language (what?! not nurture?), where the language was presumably encoded into the DNA. That’s not how humans work! If it were, we wouldn’t have the huge diversity of languages around the world, in which the language a child is taught determines the language the child speaks.

The family’s spoken language is the cause for the child’s spoken language in almost all cases.

Nature gifts us our body and its architecture. Experience, which is ‘context’ (or ‘pragmatics’ if you are a linguist), provides nurture. Language, gymnastics, how to ride a bike and how to eat at a table with utensils are all ‘nurture.’

What is nature?

Nature provides a human body with all that is needed to learn a language. Its creation is non-trivial, creating our body and organs. The complexity is immense, with human experts explaining most aspects of our body. The weakest explanation always seems to come from how our nervous system works. That is the goal of Patom theory — to explain how our brain controls the nervous system.

The human brain’s function is the final frontier in science because models of it outside of Patom theory are inconclusive.

What is Computation?

In simplified terms, general-purpose computers have a logic unit and memory. To perform some function, defined memory locations (registers) are allocated some encoded model and then the logic unit is told to execute some pre-programmed instruction that changes the memory as defined by the architecture.

This is formal science in which humans define the rules.

The memory can be reused as needed to store any encoded data desired.

This means that by inspection of any memory, its meaning cannot be determined without finding out what the encoded representation means.
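To make that point concrete, here is a minimal Python sketch. The four byte values are chosen purely for illustration; the only library used is Python’s standard `struct` module. The same bytes yield three unrelated “meanings” depending on which encoding the reader assumes.

```python
import struct

# Four bytes sitting in memory; nothing in the bytes themselves says what they mean.
raw = bytes([0x42, 0x28, 0x00, 0x00])

as_int = struct.unpack(">I", raw)[0]    # read as a big-endian unsigned 32-bit integer
as_float = struct.unpack(">f", raw)[0]  # read as a big-endian 32-bit float
as_text = raw[:2].decode("ascii")       # read the first two bytes as ASCII text

print(as_int)    # 1109917696
print(as_float)  # 42.0
print(as_text)   # B(
```

Without knowing which of these readings was intended, inspecting the memory tells you nothing about what it represents.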

With the invention of the internet, crawlers, which are programs that inspect memory at discovered sites, can index the memory with the help of an algorithm to efficiently search for it later, when requested.

The brain is not like this because it stores memories where they are matched, not in predefined, arbitrarily encoded storage locations.

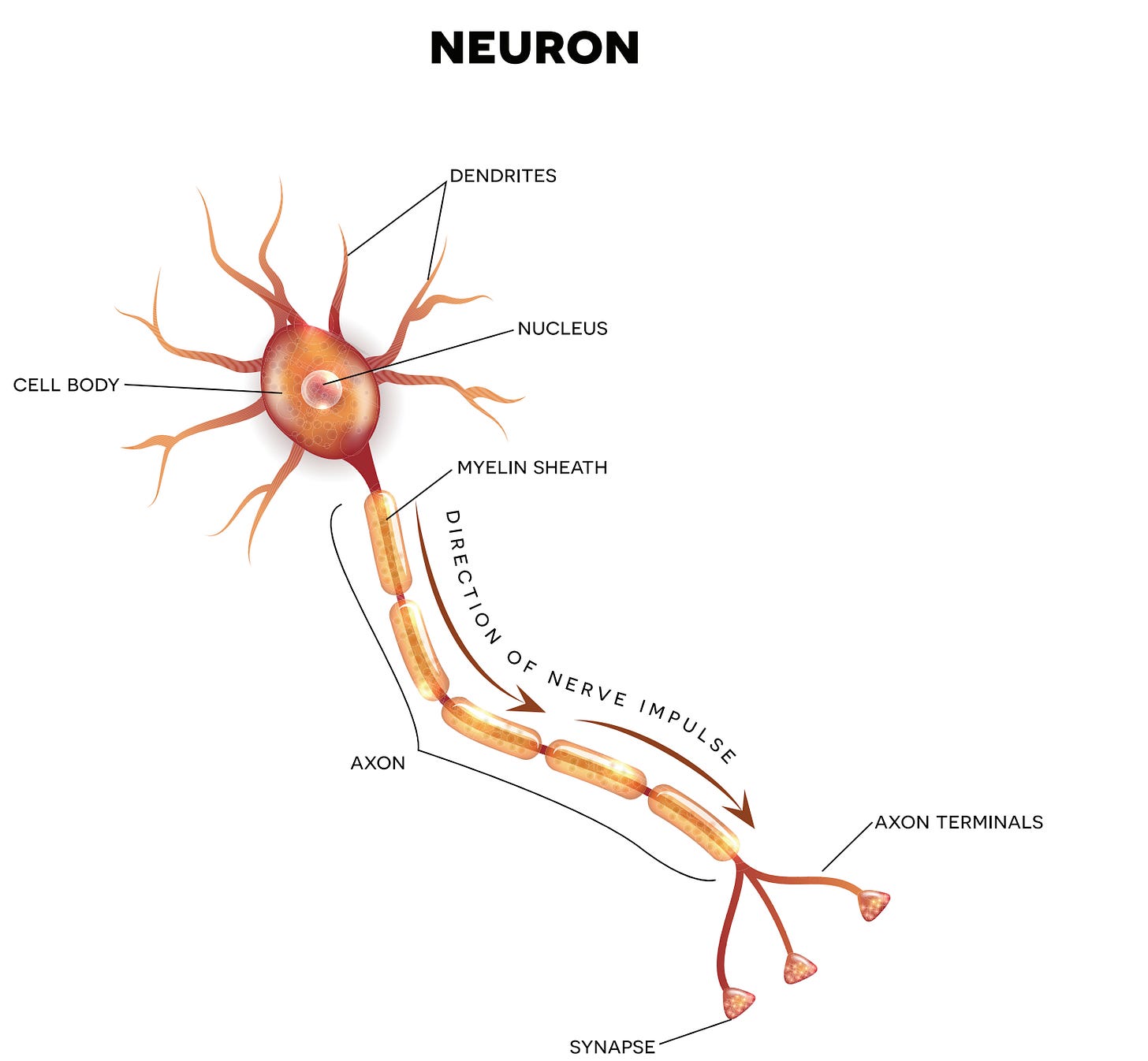

What do neurons do?

Today’s dominant theory is that neurons can be considered to be statistical processors. It’s the application of a computational model. It’s been the popular model since the 1940s, with strong parallels drawn between neurons and computer circuits, or logic gates, that take inputs to produce outputs. McCulloch and Pitts’s 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” lays out the model clearly.

In this model, neurons receive inputs with weighted values and signal responses with weighted outputs. This model is at the heart of the Deep Learning paradigm that has made Geoffrey Hinton, the Nobel laureate in physics, very famous and that is inside today’s Large Language Models (LLMs) and other generative AI.
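As a rough illustration of this computational view (not of Patom theory), here is a simplified threshold unit in Python. It is a sketch in the spirit of the 1940s model rather than the exact formulation in the paper, and the function name, weights and thresholds are my own choices for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (1) when the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# With equal weights, the threshold alone decides which logic gate the unit mimics.
pairs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_gate = [threshold_neuron([a, b], [1, 1], 2) for a, b in pairs]
or_gate = [threshold_neuron([a, b], [1, 1], 1) for a, b in pairs]

print(and_gate)  # [0, 0, 0, 1]
print(or_gate)   # [0, 1, 1, 1]
```

This is why the comparison with logic gates came so naturally: the unit is just arithmetic on its inputs.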

A layered network of these processing neurons can be trained using techniques like gradient descent, but these statistical engines aren’t human-like in their function, as you will see shortly.
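For readers who have not seen gradient descent, here is about the smallest example I can give: a single sigmoid neuron (not a layered network) trained by stochastic gradient descent to behave like an OR gate. The function names, learning rate and step count are arbitrary assumptions made for the sketch. It illustrates the mainstream statistical model, not Patom theory.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_or_neuron(steps=2000, lr=0.5):
    """Nudge two weights and a bias, example by example, to reduce the output error."""
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(steps):
        for (x1, x2), target in data:
            out = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = out - target  # gradient of the cross-entropy loss at the neuron's input
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b

def predict(w, b, x1, x2):
    return 1 if sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5 else 0

w, b = train_or_neuron()
predictions = [predict(w, b, x1, x2) for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(predictions)
```

Notice that what is “learned” ends up as a handful of numbers (`w` and `b`). Nothing in those numbers is a stored pattern you could point to.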

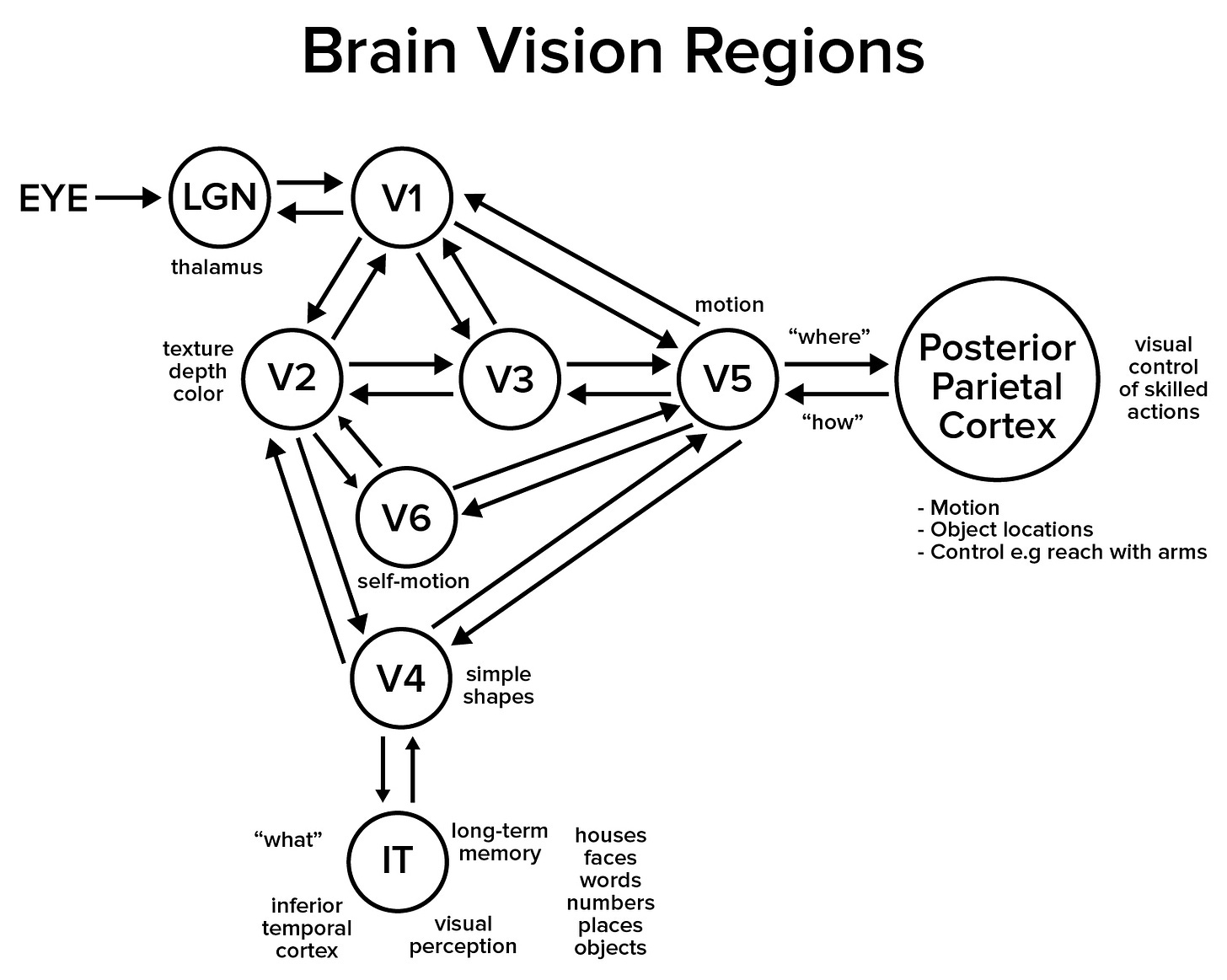

The Patom model is quite different. V1 in the diagram below shows the bidirectional links to regions V2, V3 and V5. Each region is made of neurons in layers, and those layers include forward projections and backward projections. Instead of thinking of a neuron as a computational circuit, Patom theory models it as a pattern matcher, signalling only when a match is made.

In the diagram above, each region operates with its neurons in similar ways, projecting forward with neurons and backwards with neurons. These regions provide the building blocks of a human brain.

Regions and their constituents (cortical columns) are the brain’s building blocks made up of neurons performing particular functions, not neurons acting like processing units acting on stored, encoded or transmitted data.

What’s inside a region?

Notice that the storage of patterns takes place inside the region, not with some kind of encoding and transmission. All that is being sent is a message indicating that some particular pattern is matched. Now, when a pattern is matched, a set of neurons activate, sending a message to the next layers.
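Here is a toy sketch of that idea in Python. It is only an analogy, and everything in it is my assumption for illustration: the `Region` class, the input names like `in3`, the pattern labels, and the simple rule that a pattern matches when all of its inputs are active. The point to notice is that the patterns never leave the region; only the names of matched patterns are signalled onward.

```python
class Region:
    """Toy region: patterns are stored here, and only match signals leave."""

    def __init__(self, name):
        self.name = name
        self.patterns = {}  # label -> set of input lines that must all be active

    def learn(self, label, active_inputs):
        # Store the pattern where it was matched: inside this region.
        self.patterns[label] = frozenset(active_inputs)

    def match(self, active_inputs):
        """Return the labels of every stored pattern fully present in the input."""
        active = set(active_inputs)
        return sorted(label for label, p in self.patterns.items() if p <= active)

v1 = Region("V1")
v1.learn("vertical_edge", {"in3", "in7", "in11"})
v1.learn("horizontal_edge", {"in5", "in6", "in7"})

signals = v1.match({"in3", "in7", "in11", "in20"})
print(signals)  # ['vertical_edge']
```

A downstream region receiving `signals` gets no encoded copy of the input, just the fact that a particular pattern was matched.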

We aren’t talking about the interactions of regions yet, but those regions can signal back to confirm that the patterns make sense in the current context, or that they do not. This is important in the theory because top-down and bottom-up recognition both have their place in ensuring accuracy and recognizing incomplete patterns.

In order to deal with the complexities of the world and retain an accurate model, the bidirectional nature of these regions adds a useful dimension.

By storing sensory information where it is matched in the sensory input, and then combining those matches at a higher level, very complex environments can be recognized, like a world model. The details of this will come up in later posts.

What does a Patom do?

A pattern-atom or Patom provides a mechanism to store, match and use patterns. These patterns aren’t simplistic units, but multi-layered ones in a hierarchy from senses to contextual memories to contextual sequences. Let’s just look at a single layer in today’s introduction.

What does a pattern do in visual memory?

Visual memory in, say, the occipital lobe receives a set of signals from the eye via the optic nerve at region V1. Regions like this can be thought of as Patom storage, storing relevant patterns. They receive signals from the optic nerves that come from the eye’s neurons. The conversion of light into signals, by rods (low light) and cones (bright light with color), is done in the human eye.

The inputs are highly constrained by the outputs from the eyes. As patterns change over time, the V1 region will stop matching one set of patterns and start matching others. The theory allows patterns to be sets of active inputs, sequences of sets or combinations of both. Sets of active patterns are like a snapshot in which a set of inputs are active and sequences enable changes over time.
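The distinction between sets and sequences can be sketched in a few lines of Python. The function names and the input labels are hypothetical, and the choice to let a sequence match even when unrelated snapshots fall between its steps is my own simplifying assumption, not something the theory dictates.

```python
def matches_set(pattern, snapshot):
    """A set pattern matches when all of its inputs are active in one snapshot."""
    return set(pattern) <= set(snapshot)

def matches_sequence(pattern_steps, snapshots):
    """A sequence matches when its sets appear in order across the snapshots.

    Sharing one iterator means each step resumes where the previous one stopped,
    so order matters and earlier snapshots are never reused.
    """
    remaining = iter(snapshots)
    return all(
        any(matches_set(step, snap) for snap in remaining)
        for step in pattern_steps
    )

moving_right = [{"a"}, {"b"}, {"c"}]            # inputs a, then b, then c become active
frames = [{"a", "x"}, {"x"}, {"b"}, {"c", "y"}]  # snapshots over time

print(matches_set({"a"}, frames[0]))                         # True
print(matches_sequence(moving_right, frames))                # True
print(matches_sequence(moving_right, list(reversed(frames))))  # False
```

The same active inputs in the reverse order fail to match, which is what lets a sequence capture change over time rather than just content.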

With the visual V1 region operating, its outputs are visual patterns, unconstrained by anything other than responses directly related to vision. Clarifications from context, using the bidirectional links, can constrain vision to comply with context by rejecting ambiguous patterns in favor of what makes sense.
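As a deliberately crude sketch of that top-down step, imagine vision offering several candidate matches and a higher region reporting what the current context expects. The function name, the labels, and the fallback rule (keep every candidate when context supports none of them) are all assumptions I am making for the example.

```python
def resolve_with_context(candidates, context_expectations):
    """Keep the candidates that context supports; if none are supported, keep all."""
    supported = [c for c in candidates if c in context_expectations]
    return supported if supported else list(candidates)

# Vision alone cannot decide between two similar shapes.
visual_candidates = ["snake", "garden_hose"]

in_the_garden = resolve_with_context(visual_candidates, {"garden_hose", "lawn", "tap"})
no_help = resolve_with_context(visual_candidates, {"kitchen", "table"})

print(in_the_garden)  # ['garden_hose']
print(no_help)        # ['snake', 'garden_hose']
```

Bottom-up matching proposes and top-down context disposes; when context has nothing to say, the ambiguity simply remains.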

Conclusion

Today’s post introduced Patom theory.

I started with nature versus nurture. There is a lot of innateness from nature that enables the human body to operate. Given all these amazing features, the brain is able to build with nurture, automatically taking inputs and learning to recognize things, many of which would not be possible without the body’s architecture.

The popular model of neurons from the dawn of computers in the 1940s was then contrasted with Patom theory, which takes a more holistic view.

Patterns that come from the senses are recognized and signaled, then confirmed or denied by other regions upstream.

Today only one region, visual input, was considered, along with its interaction with the neurons in the eyes. There is more to come to explain the non-computational model that Patom theory represents. Stay tuned for Part 2.

Do you want to get more involved?

If you want to get involved with our upcoming project to enable a gamified language-learning system, the site to track progress is here (click). You can keep informed by adding your email to the contact list on that site.

Do you want to read more?

If you want to read about the application of brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science,” which explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. The book link is here on Amazon in the US.

In the cover design below, you can see the human brain incorporating its senses, such as the eyes. The brain’s use is being applied to a human-like robot who is being improved with brain science towards full human emulation in looks and capability.