Last time I identified the problem. I wrote:

AI is being held up by standard ideas used in computer science that are ineffective for human brain emulation. The main problem is that the representation used in computers is alien to that of a brain but is forced in nonetheless. We need to move beyond 1950s data models! … But AI evolved from digital computer representations; the representations that ushered in the information age.

Let’s progress the discussion by comparing how a digital computer represents information with Patom brain theory.

As usual, I will use hotlinks to online references for further reading.

Representation: 0 and 1 (processing model?)

We can represent binary code with ‘0’ and ‘1.’ Using base 2, we can string binary digits together to form larger numbers: hexadecimal ‘F’, for example, is binary 1111 (15 decimal). This approach is effective for storing numbers and characters, and it replicates the skills of the human computers who did calculations in the days before digital computers. But that is the limitation: digital computers emulate the work of human computers, not their sensory and motor systems.
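As a small illustration of the encoding described above, here is a sketch in Python (the language is only a convenient choice for the example). It shows the same value written in base 2, base 16 and base 10, and a character stored as a number that stands in for the symbol.

```python
# Hexadecimal 'F', binary 1111 and decimal 15 are the same value.
value = int("1111", 2)            # parse a base-2 string into an integer
hex_form = format(value, "X")     # 'F'
bin_form = format(value, "04b")   # '1111'

# Characters are stored the same way: a number stands in for the symbol.
code = ord("c")                   # the code point for the letter 'c'
bits = format(code, "08b")        # that code point as eight binary digits
restored = chr(int(bits, 2))      # decoding the bits gives the letter back

print(value, hex_form, bin_form)
print(code, bits, restored)
```

Note that nothing in the bit string says ‘letter’ or ‘c’. The meaning comes entirely from the agreed table used to decode it.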

Another approach is combinatorial as adopted in Patom theory. The active value ‘1’ represents the cumulative total of all downstream patterns where bottom-up patterns start with inputs. A ‘1’ is validated by upstream patterns which are top-down patterns that are consistent across all inputs.

This model doesn’t transfer between machines as easily as binary codes, since the entire network is involved, but once established it can solve problems that have been unsolved for many decades.

When you recognize your mother as an object, the upstream patterns ground her in time and space in your experiences of events. The downstream patterns include the recognition of her face and body, how she sounds when speaking, what her perfume smells like when you kiss her, and so on. These patterns can be refined of course, since your young mother and older mother are both downstream, while particular situations upstream will be associated with the appropriate images.

The challenge in AI has always been the analysis of brains while forcing in the computational paradigm! Seeing past our current stored patterns is hard for most people. Why? We tend to see what we expect because our brain finds patterns at all levels of our experiences.

Neurons aren’t computers or statistical engines

There are a couple of ways to represent the function of a neuron:

A statistical device that adds up its positive and negative inputs and, if its threshold is reached, sends a statistical response to downstream neurons. (The popular model ~2025 based on computation)

A symbolic device that fires when a pattern is matched. (Patom Theory/PT)
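The two views can be contrasted in a few lines of Python. This is a hedged sketch, not an implementation of either model: the function names, the weights and the use of a set-subset test to stand in for ‘a pattern is matched’ are all assumptions made for illustration.

```python
def threshold_neuron(inputs, weights, threshold):
    """Statistical view: sum the weighted inputs, fire if over threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold


def pattern_neuron(active_inputs, stored_pattern):
    """Symbolic view: fire only when every element of the stored pattern is active."""
    return stored_pattern <= active_inputs  # subset test (illustrative assumption)


# Two excitatory inputs are active, the inhibitory one is silent.
fires_a = threshold_neuron([1, 1, 0], [0.6, 0.5, -0.9], threshold=1.0)

# The stored pattern {'curve', 'gap'} is present among the active inputs.
fires_b = pattern_neuron({"edge", "curve", "gap"}, frozenset({"curve", "gap"}))

print(fires_a, fires_b)
```

In the first function someone has to choose the weights and the threshold. In the second there is nothing to tune: the pattern is either present or it is not.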

Neurons don’t process

Since at least the 1950s there has been little progress in creating machines that emulate animal skills like integrated sensory recognition and motor control using artificial forms of these (processing) neurons. It is somewhat awkward to align neurons with the arithmetic logic unit (ALU) that is central to a digital computer. Treating them as having statistical inputs and statistical outputs makes them a kind of complex logic gate. They simply add up positive (excitatory) and negative (inhibitory) inputs and only fire when over threshold.

But what magic would set these values when learning? How would a connection learn how other connections are set and adjust their weights in combination, as needed? How would programs be written and by what to emulate the processing paradigm?

Others hypothesize that not only is the neuron a processing machine, but so are its dendrites and more, and that they are influenced by the quantum realm. I’ll factor that out for now on the basis that there is no obvious way these little processors could be taught and, in any case, Ockham’s Razor calls for that hypothetical model to be excluded without persuasive evidence.

In a computer, information must have a look-up step using search. In PT, the pattern is associated with all its learned properties using links.

Today, evidence suggesting that neurons are processing machines is lacking.

Neurons signal when patterns are matched

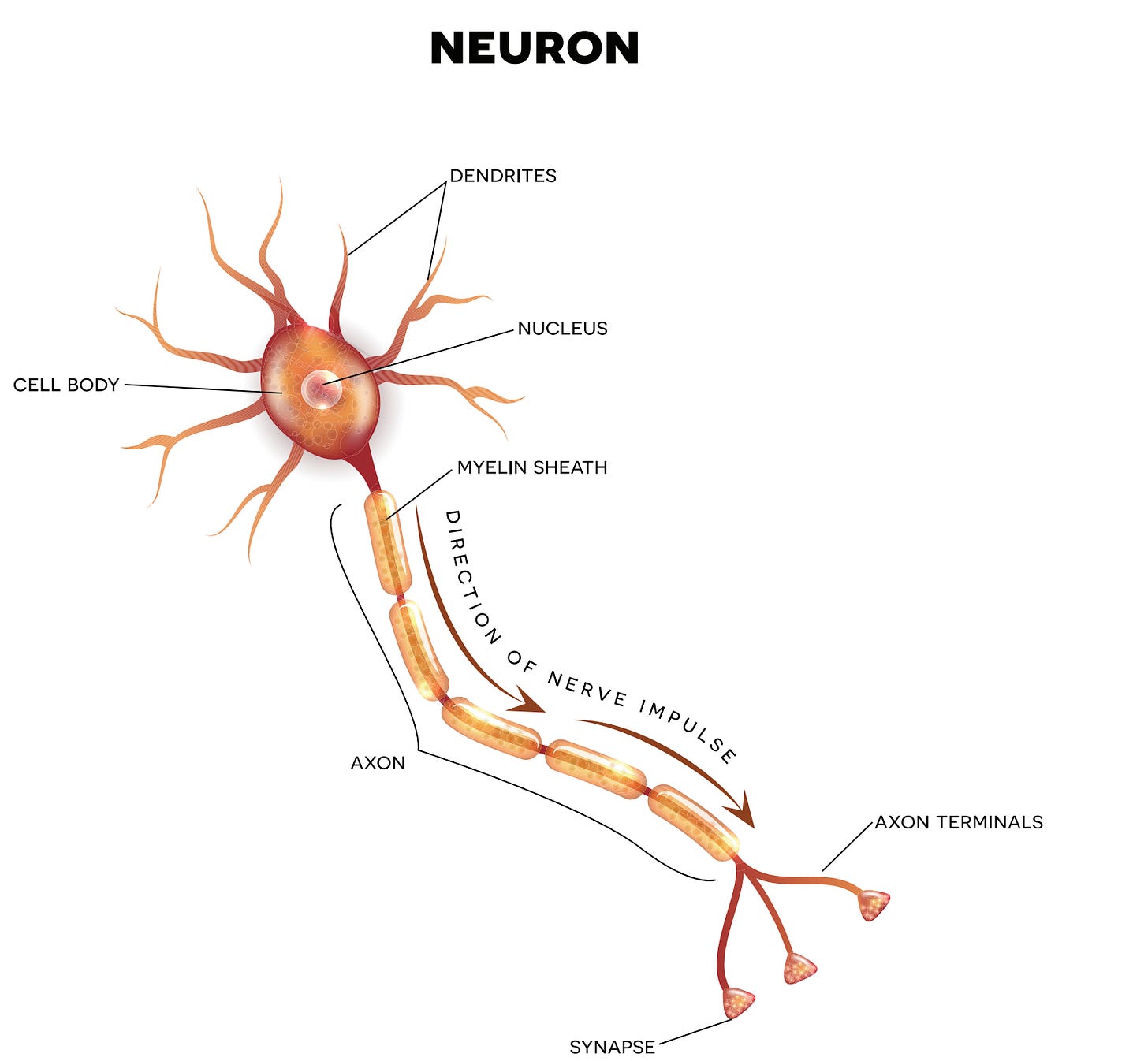

In the figure, a single neuron is shown with its inputs (dendrites) determining whether the cell should fire by an electrochemical process that releases chemicals at its synapses.

How an ALU (arithmetic logic unit) could be assembled inside a brain appears to be an unsolvable problem. A human can map out a design, but doing so from the perspective of a neuron inside a brain, with its target connections unknown, seems unlikely.

In Patom theory, one or more neurons signal when they match a pattern. The combination of patterns identifies more complex patterns such as multi-sensory objects. Validation is performed top-down as higher-level patterns validate lower-level ones and bottom-up as new inputs are received.

A single model that doesn’t rely on processing per se would be better: something like pattern matching in brain regions (or columns or other units) that preserves the patterns across regions to retain context (linking).

Digital Information versus Patoms

Digital information can be thought of as a set of binary digits or bits. In a computer, bits are the building blocks of information with data structures enabling combinations of types and classes to be defined with arbitrary levels of complexity. The cost of such classes in computing is the intervention to correctly define such structures manually on an ad-hoc basis. Encoding as in a computer is currently done best by a human setting a system up to model a particular entity, such as in an ER (Entity Relationship) model.

In contrast, a Patom can represent any pattern as a single signal because the reverse of that pattern retains the entire collection of patterns, and they, in turn, retain their reverse patterns, and so on. Because of this architecture, a single signal can accurately convey a vast number of possible interpretations.

Lookup versus association

In a computer, the first lookup converts the encoded format, such as ASCII, into its associated character representation. And another lookup encodes more complex entities, such as a Human that is made up of a name, address, phone number, and so on. Today’s computer model is central to our way of thinking of computer representation and is a major impediment to human emulation.
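The two lookups can be sketched in Python as follows. The `Human` record, its fields and the sample data are hypothetical, chosen only to mirror the example in the text.

```python
from dataclasses import dataclass


@dataclass
class Human:
    """A hand-defined record type (hypothetical fields for illustration)."""
    name: str
    address: str
    phone: str


# First lookup: decode stored numbers into characters via the ASCII table.
raw = bytes([65, 100, 97])
text = raw.decode("ascii")

# Second lookup: search a collection for the record that carries that name.
people = [
    Human("Bob", "2 Low Rd", "555-0101"),
    Human("Ada", "1 High St", "555-0100"),
]
match = next((p for p in people if p.name == text), None)

print(text, match)
```

Both steps depend on structure that a person set up beforehand: the character table in the first case and the `Human` definition in the second.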

An alternative model is the association model. If an element is recognized, it is signaled uniquely. Unique signaling means patterns are only stored once and, therefore, search is not needed. This is the concept of atomic patterns in which each pattern cannot be broken down further, just as elements on the periodic table are indivisible in chemistry.

The signal doesn’t encode the information but instead is accessible through a reverse link to it.
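A minimal sketch of the association idea, under stated assumptions: the `Pattern` class, the `link` and `unfold` helpers and the letter examples are all hypothetical names invented here, and ordinary Python object references stand in for links. It is not a description of how PT is actually implemented.

```python
class Pattern:
    """A pattern stored exactly once; its 'signal' is simply its identity."""

    def __init__(self, label):
        self.label = label
        self.parts = []    # reverse links down to the patterns it is made of
        self.used_by = []  # links up to the patterns that include this one


def link(whole, *parts):
    """Connect a larger pattern to its parts in both directions."""
    for part in parts:
        whole.parts.append(part)
        part.used_by.append(whole)


def unfold(pattern):
    """Follow reverse links to recover everything behind one signal."""
    if not pattern.parts:
        return [pattern.label]
    return [leaf for part in pattern.parts for leaf in unfold(part)]


# Each letter exists once and is shared by every word that uses it.
c, a, t = Pattern("c"), Pattern("a"), Pattern("t")
cat, act = Pattern("cat"), Pattern("act")
link(cat, c, a, t)
link(act, a, c, t)

print(unfold(cat))                    # the letters reached from the 'cat' signal
print([p.label for p in c.used_by])   # the words reached from the 'c' signal
```

No search takes place here. The `cat` object holds no copy of its letters, only links to the single stored instance of each, and the same `c` object is reachable from both words.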

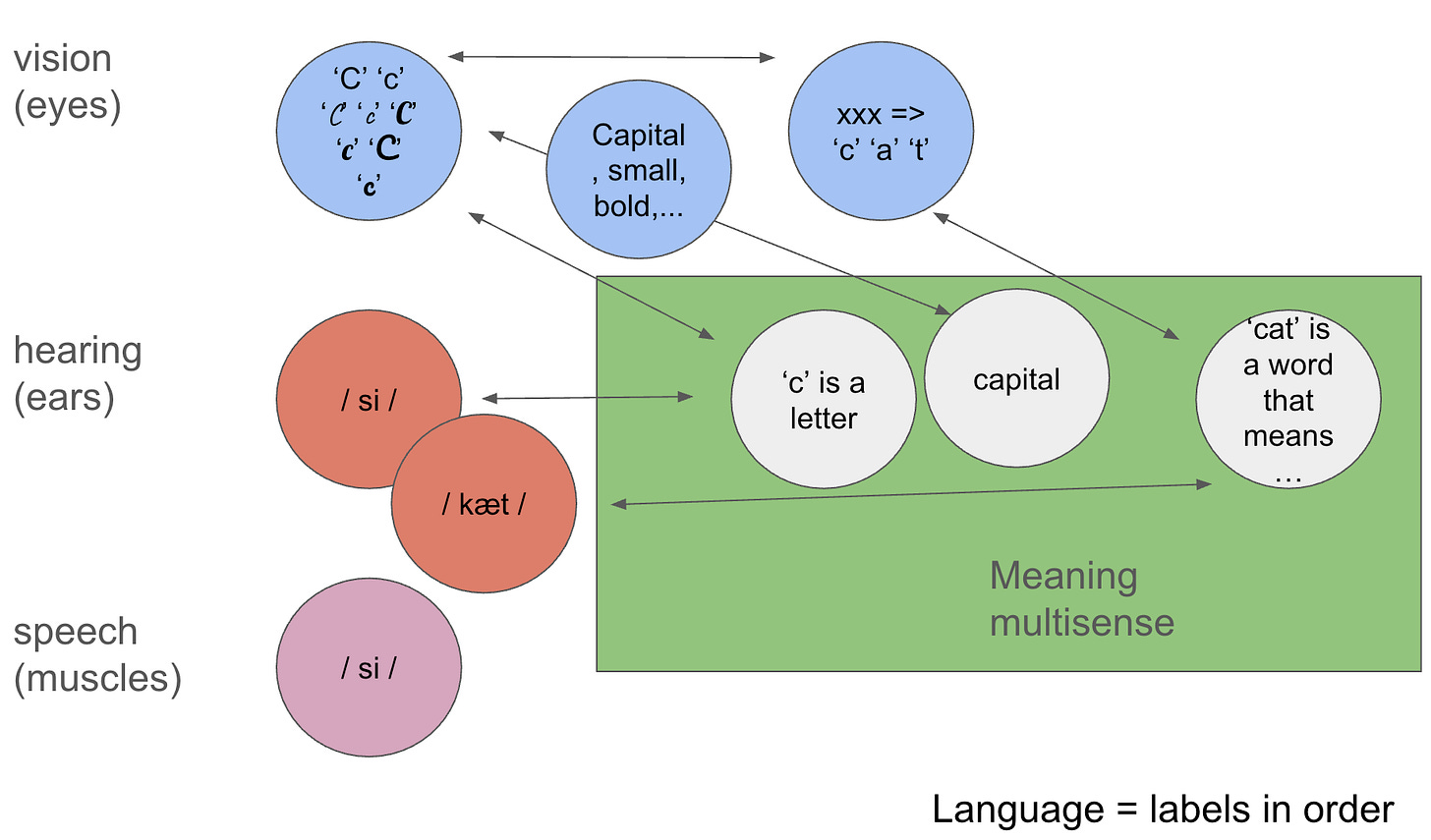

The diagram illustrates how a signal can represent a single character: manipulated through its activation, but never encoded. This mechanism can be seen in human brains, as recognition is performed linearly from sensory recognition through to complex multisensory recognition in under 100 sequential neural activations.

Figure 1 describes a common human skill. There is a category of symbols called letters. An example of that category is the letter ‘c.’ But there is an astronomical number of ways to draw the letter visually: think of properties like font, size, italics/bold/strikethrough, text color, highlight color, capital/small and so on.

These features may be important, or may not be. Cat, cat, CAT and cAt may all mean the same thing, or perhaps some emphasis is placed on a particular word form, or perhaps there was a mistake in typing, or perhaps the language requires capitalization. Given only a single word, rather than sentences in context, we cannot be sure.

We can recognize characters sometimes only by the rest of the word. And sometimes we can only recognize the word by the rest of the sentence.

These properties of human codes, like language, have proven to be maddening to scientists and engineers who attempt to break up the end-to-end recognition into complete units. Parsing has been considered intractable (NP-hard) since the 1980s, based on an analysis of the way parsing was done.

But humans seem to effortlessly recognize their native languages without any obvious strain, so whatever the brain does with its comparatively slow mechanisms is sufficient.

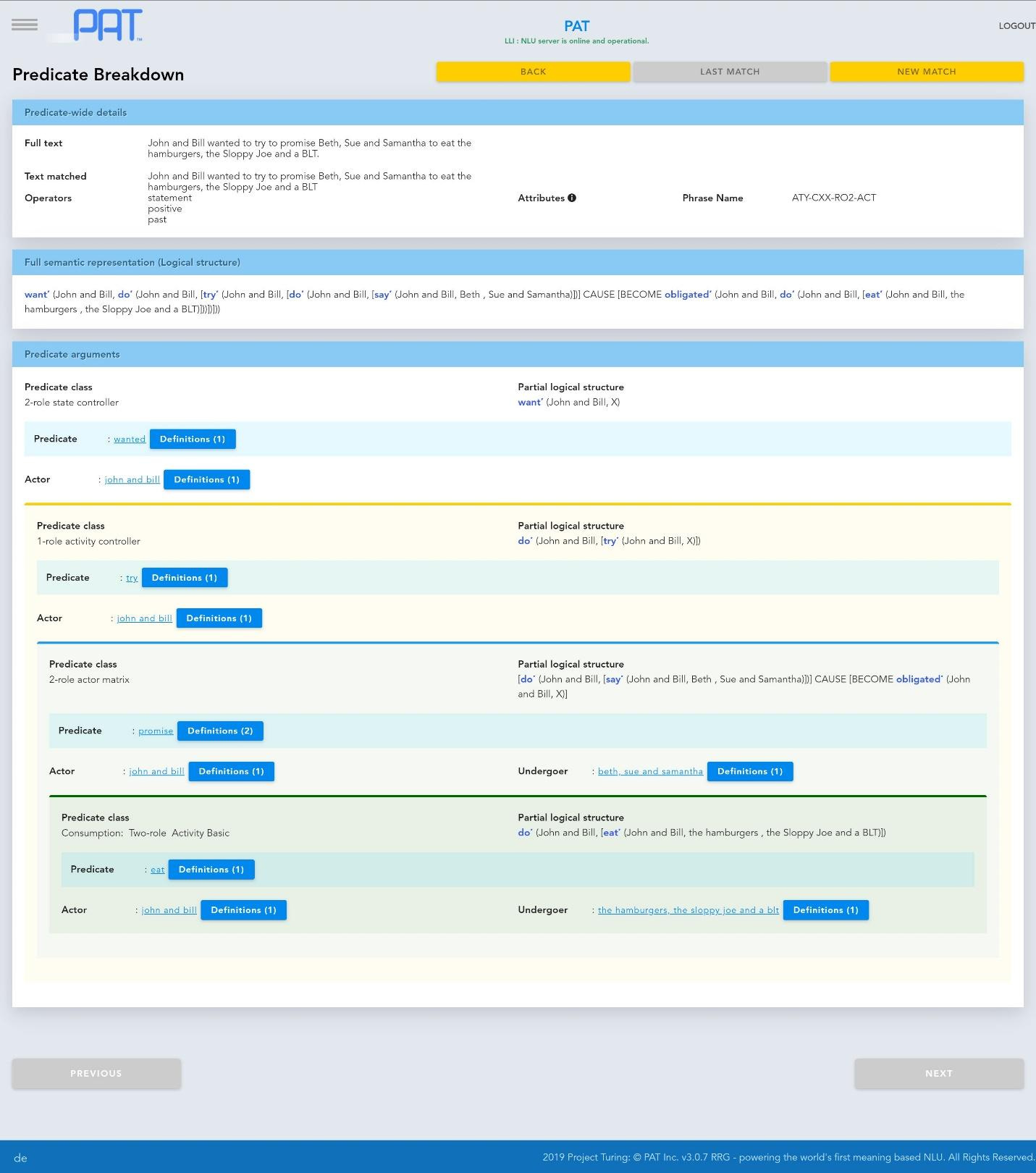

Figure 2 shows the breakdown of a sentence in real time, in which a large number of possible interpretations are excluded by higher levels of analysis in context. It shows that a solution to the parsing problem running on a digital computer is possible (and working today).

Language steps

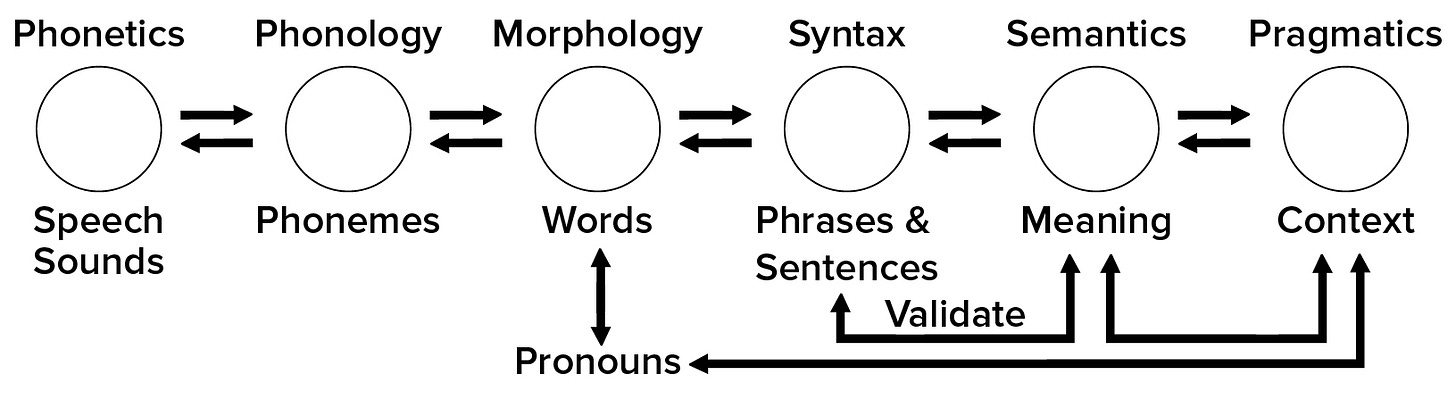

We can explore the theory of what our brain can do with human language. A simplified model of our rapid recognition, from the sounds through to their meaning in context, is shown in Figure 3.

How does this happen in real-time and with our accurate recognition of minute distinctions in expression? Note that this is only a component of a conversation, not the whole thing.

There are patterns needed to convert sound to the phones of our language using the ear’s cochlea to convert sound waves into neural signals through the interaction of hair cells and stereocilia. These patterns cannot be properly resolved without recognizing the meaning of phrases collected by syntactic phrases and resolved by semantic validations (in semantics, predicates determine their arguments). The resolved predicates still need to make sense in context.

There are a number of technical simplifications possible in the recognition of syntax that align with PT, such as the removal of Role and Reference Grammar (RRG) operators (a topic to explain in more detail another time).

There are typically not many possible interpretations to be addressed at each level, so provided each level is represented it should be reasonable to fully emulate human language skills on a machine.

Other Brain Regions

In the human vision system, there are different regions for color and motion. Damage to the visual motion region (V5) leaves the patient unable to see motion normally: an object like a moving car is seen in one place, then closer, and then closer again, rather than moving smoothly. The deficit is highly dangerous as a result. The motion of an individual object is recognized in a particular region, V5, even though the objects themselves are fully recognized separately!

Color is another important visual capability. In another example of ‘features,’ color vision can be lost through damage to another region that is also responsible for shape. Perhaps shape and color are connected independently to the objects themselves, since colors are recognized in the eye and aligned with the same object in this region. We can still recognize a tomato even if it is yellow, green or blue, suggesting the shape pattern and its color are connected, perhaps through typical associations.

This capability for the brain to recognize features in specific brain regions supports the pattern-matching paradigm because entire patterns can be separated from distinctions that are important, but perhaps not as important for survival as others. This property comes up a lot in language understanding observations as well as sensory and motor control functions.

Discussion

Today’s article focuses on the dramatic difference between computers and brains as modeled.

The concept of Patom theory (PT) came from an attempt to explain how our brain works, based on observations of brain damage and active brain region visualization seen with medical imaging equipment.

Why do some regions of the brain become active when someone is thinking about something, but not currently experiencing it? It makes sense that stored patterns are accessed when matched again after recall from a higher level. Where is that activity? In the areas where the experience originally took place.

Why do brain regions appear at different anatomical locations across different people? As PT models these regions as the storage of links back to the sensory input, and those links could be stored anywhere with a connection, regions are allowed to be customized as each person learns new things through experience.

Why can some people who have lost memories regain them fully after some time? If the patterns are disrupted, another path could be found to reestablish the original associations, which were intact but without a path to access them.

There are many interesting consequences of PT as a result of the bidirectional and hierarchical nature of brains, which is needed to explain their non-computer-like behavior.

Conclusion

The computational model has been tried for AI since digital computers were first created but without successful emulation of animal-like skills needed for robotics and language.

Processing itself doesn’t fit in a brain, since nothing is available to write a program and, worse, nothing is visible that would distribute that program around a brain.

Equally, representation using computer-like memory doesn’t align with what cases of brain damage and medical imaging scans show us about how our brain works.

A focus on processing and representation that emulates the human brain is needed to move from 1950s computer models to 2025 brain-like models. PT could be that model.

Do you want to read more?

If you want to read about the application of brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science.” It explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. The book link is here on Amazon in the US.

In the cover design below, you can see the human brain incorporating its senses, such as the eyes. The brain’s use is being applied to a human-like robot who is being improved with brain science towards full human emulation in looks and capability.