New language model for human conversation!

The explanation of 'contextual meaning' was a conference highlight

Linguistic Conference: RRG 2025

The linguistic conference in Kobe, Japan, has just wrapped up. Over 2 days, expert linguists from around the world presented, in English, their progress on a variety of languages including Japanese, Taiwanese, Cantonese, Breton, Vietnamese, German, Mandarin, Mexican languages, Taiwan Sign Language, and a range of African languages. They all use Role and Reference Grammar (RRG) as the model of communication. Its primary developer, Robert D. Van Valin, Jr., has continued to work on the theory and to grow its global community since the early 1980s.

What makes Van Valin’s contributions so significant in the 20th and 21st centuries is their adoption of a model in which the words in a language link to their meaning with a single algorithm, regardless of the language. While all languages differ in vocabulary, phrases, writing and pronunciation, it is amazing that a single model explains the common features of them all.

In my view, this year’s breakthrough came in the paper “Towards a new representation of discourse in RRG” by Balogh, Bentley and Shimojo. It was then strongly supported by Van Valin’s keynote presentation, “Information Structure and Argument Linking Reconceived,” which wrapped up the conference.

Breakthrough Model

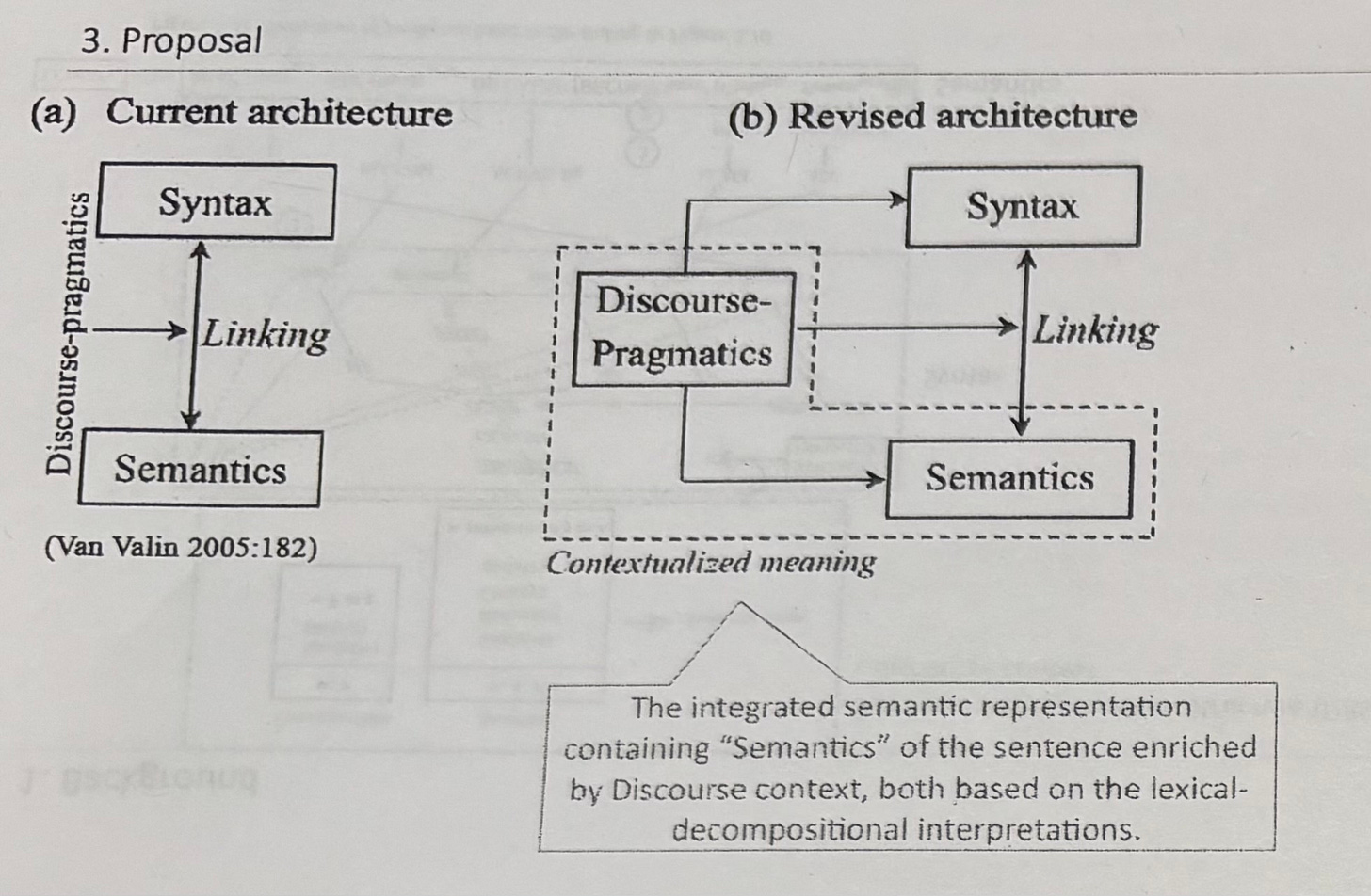

The new RRG model to represent discourse seems sensible, but the important thing in science is to align the model with observation and then make predictions that line up with experiments. In this case, the diagram was proposed in the first paper and reinforced with experimental evidence in the second, the keynote presentation.

Figure 1 shows the change in model.

This is scientific progress. I have yet to review the new model in detail as it applies to the existing conversation engine in my AI applications, but I am sure there are some key distinctions made in the paper that can be applied.

Although it is a new proposal, Van Valin gives this model great support in his conclusion:

“Balogh, Bentley & Shimojo have proposed a new way to conceive of the interaction of syntax, semantics and discourse pragmatics in RRG. I have attempted to build on their work in my talk today, as I feel that their approach is very promising and offers a solution to the problems with using DRSs to represent the ICG in RRG that they raise.”

In practice, it explains well-known observations in language, such as what the words ‘Mary did too’ mean in:

“John ate a chocolate. Mary did, too.”

Answer: “Mary ate chocolate, also.”
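To make the 'Mary did, too' example concrete, here is a minimal Python sketch of how a record of the conversation so far could fill in the missing predicate. It is only a toy illustration under my own assumptions: the `Proposition` and `CommonGround` classes and the `resolve_did_too` method are hypothetical names invented for this example, and they are not the representation proposed by Balogh, Bentley and Shimojo or the one used in any existing system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Proposition:
    """A toy meaning: who did what to what (hypothetical structure)."""
    actor: str
    predicate: str
    undergoer: Optional[str] = None


class CommonGround:
    """Hypothetical store of what the conversation has established so far."""

    def __init__(self) -> None:
        self.history: List[Proposition] = []

    def add(self, prop: Proposition) -> None:
        self.history.append(prop)

    def resolve_did_too(self, actor: str) -> Proposition:
        """Interpret 'X did, too' by reusing the most recent predicate."""
        if not self.history:
            raise ValueError("nothing in the common ground to refer back to")
        last = self.history[-1]
        resolved = Proposition(actor, last.predicate, last.undergoer)
        self.add(resolved)  # the resolved meaning joins the common ground
        return resolved


ground = CommonGround()
ground.add(Proposition("John", "eat", "a chocolate"))  # "John ate a chocolate."
mary = ground.resolve_did_too("Mary")                  # "Mary did, too."
print(mary)
```

The point of the sketch is simply that the second sentence cannot be understood on its own: its meaning is recovered from what was just said, not from the words 'did, too' themselves.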

Applying Brain Function to automate question-answering

My talk continued my work in explaining Patom brain theory. Having a non-computational brain model hints at solutions to a number of otherwise unsolved scientific issues, like:

how humans recognize the meaning of words in language without needing a search engine

why language learning can be accelerated by tasks proposed by the psycholinguist Chris Lonsdale to help adults learn an additional language

what is missing that has held up the key aspects of AI since the 1950s

and how RRG integrates with the system we built at Pat Labs in Australia with some key distinctions like its syntactic model, its semantic implementation and its strategy to create the Next Generation of language-based AI.

My full 45-minute talk, including its question-and-answer session, is available on YouTube here.

Let me go through the overview of these points, in turn, to summarize the talk.

Why don’t we need a search engine in our brain? What’s wrong in AI?

Patom theory models a brain as a pattern-matching unit in which any pattern can only be stored once, as the atom of that pattern. In language, this means there is only one representation for the letter ‘c’, for example. Don’t worry, there are many, many visual patterns allowed that connect to this single, higher-level pattern. All those images mean ‘letter c.’
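The 'stored only once' idea can be illustrated with a short Python sketch. This is a toy under stated assumptions: the `Atom` and `PatternStore` names are hypothetical, and a dictionary lookup on a computer is only a stand-in for the idea, not a claim about how a brain (or the Pat Labs system) implements it.

```python
class Atom:
    """A single stored pattern, e.g. the concept 'letter c'."""

    def __init__(self, label: str) -> None:
        self.label = label


class PatternStore:
    """Toy store: one Atom per label, with many lower-level variants linked to it."""

    def __init__(self) -> None:
        self._atoms = {}  # label -> Atom (exactly one per label)
        self._links = {}  # lower-level variant -> Atom

    def atom(self, label: str) -> Atom:
        """Return the one Atom for this label, creating it only if absent."""
        if label not in self._atoms:
            self._atoms[label] = Atom(label)
        return self._atoms[label]

    def link(self, variant: str, label: str) -> None:
        """Connect a lower-level pattern (e.g. a glyph shape) to its atom."""
        self._links[variant] = self.atom(label)

    def recognize(self, variant: str):
        """Follow the link from a variant to its atom; None if unknown."""
        return self._links.get(variant)


store = PatternStore()
for glyph in ("printed c", "handwritten c", "italic c"):
    store.link(glyph, "letter c")

a = store.recognize("printed c")
b = store.recognize("italic c")
print(a is b)  # both variants reach the same single object
```

Every visual variant leads to the same object, so nothing needs to be searched for or reconciled afterwards: recognizing any of the shapes already is reaching 'letter c'.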

AI hasn’t progressed much against the goals set for the field in the 1950s, as evidenced by the lack of robots walking around doing things for us. Where are the robots that are cleaning, driving and performing manual and skilled human work? Where are the robots that talk to us in any language and understand what we need?

Generative AI systems, such as ChatGPT and others, lack key elements from linguistics, resulting in very expensive and wasteful use of power to drive GPUs and a need for datacenters to perform the statistical work that generative AI’s design requires.

Generative-AI engineering is ready to be replaced. Solutions such as mine, which can run on laptops, watches or mobile phones, are supported by experts in machine learning and AI who see the limitations of generative AI.

Language Learning Progress

Announcing the ‘Speech Genie’ product! Speech Genie will continue the work proven to help Mandarin speakers learn English. To follow our work, you can go to the new Speech Genie website here.

Speech Genie is preparing to build a new product that continues the work first validated for English learners in China, and this time it will integrate the Pat Labs system I have been developing since the early 2000s. The AI engine will generate sentences that meet the course developer’s needs and respond to the user’s progress, without the concern that the samples will be incorrect.

This merger of AI techniques underpinned by RRG’s linguistic science will enable tests against some long-held observations about adults’ capacity to learn additional languages and promises to continue the benchmark goal:

“Learn any Language in 6 months!”

What is my thesis for stalled AI progress?

Lack of progress with brain science has held up all aspects of AI, since the computer model brings with it fundamental limitations for biological brain emulation. Even early animals like fish can deal with multisensory objects in context. Such skills enable survival for predators and prey and reproduction of the best specimens.

In my video, I show the large contrast between today’s bipedal robots and animals like dogs. The dogs possess amazing capabilities of motion control and object tracking, while the robots appear unstable and slow-moving.

The development of sensory and motor control with a brain model such as Patom theory promises to enable integration with the robots of the future, which would also support human language emulation.

What does RRG give us to hold a conversation?

The system and its demonstration in the associated video use the fundamentals of RRG to recognize words in a language and map them to a semantic representation in context using a dictionary. As in semiotics, the science of signs, the system converts from text to meaning and, in reverse, from meaning to text.
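As a rough illustration of converting text to meaning and back with a dictionary, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `LEXICON` and `SURFACE` tables, the sense identifiers such as `bank.river`, and the simple cue-word overlap used to pick a sense are all hypothetical. It is not the RRG linking algorithm and not the patented mechanism mentioned in this post.

```python
# Hypothetical dictionary: word form -> list of (sense id, context cue words)
LEXICON = {
    "bank": [
        ("bank.finance", {"money", "loan", "deposit"}),
        ("bank.river", {"river", "water", "fishing"}),
    ],
}

# Hypothetical reverse table: sense id -> word form per language
SURFACE = {
    "bank.finance": {"en": "bank", "de": "Bank"},
    "bank.river": {"en": "bank", "de": "Ufer"},
}


def text_to_sense(word, context):
    """Pick the sense whose cue words overlap most with the context words."""
    senses = LEXICON.get(word.lower())
    if not senses:
        return None
    context_words = {w.lower() for w in context}
    # max() keeps the first listed sense when overlaps are tied
    best = max(senses, key=lambda sense: len(sense[1] & context_words))
    return best[0]


def sense_to_text(sense, language):
    """Go from meaning back to a word form in the requested language."""
    return SURFACE[sense][language]


sense = text_to_sense("bank", ["we", "went", "fishing", "by", "the", "river"])
print(sense, sense_to_text(sense, "de"))
```

Because the meaning sits between the two directions, the same sense can be expressed in a different language from the one it was recognized in, which is the property the text-to-meaning-to-text round trip relies on.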

It takes advantage of my latest patent that explains the mechanism to do this efficiently on a computer system.

Today’s generative-AI systems do not embrace the concept of Immediate Common Ground (ICG), that is, conversation in the current context only. Adding that key element promises to enable the Next Generation of AI that uses the meaning of language, in many cases potentially with only very limited knowledge required.

In my talk, a range of alignments between RRG science and its interaction with a word-sense-based semantic model were discussed.

Wrap Up

The RRG conference this year was an amazing event to participate in.

A room full of experts in RRG, with specific research into their mother tongues, other languages and endangered languages, meant a wide range of eye-opening variations and analyses.

It was sad to leave the wonderful country of Japan and the majestic beauty of the cities we visited: Kobe, Tokyo and Shimoda.

But the progress in RRG science also leads towards the next steps, which for me are the commercialization of a language-learning system that will exploit the AI service I have overseen for a long time.

And the alternative to today’s error-prone AI, like ChatGPT, promises to reduce the need to build datacenters everywhere, which greedily increase the demand for electricity and water, in favor of a low-powered, accurate AI.

Rather than running expensive machine learning, AI based on cognitive science will incorporate the RRG model and others to bring a myriad of important tools to market using human language.

Do you want to get more involved?

If you want to get involved with our upcoming project to enable a gamified language-learning system, the site to track progress is here. You can keep informed by adding your email to the contact list on that site.

Do you want to read more?

If you want to read about the application of brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science,” which explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. The book link is here on Amazon in the US (and elsewhere).

In the cover design below, you can see the human brain incorporating its senses, such as the eyes. The brain is being applied to a human-like robot, which is being improved with brain science towards full human emulation in looks and capability.