Animals survive by recognizing the world through their senses and moving effectively within it. A brain theory needs to explain how this works, and that explanation starts with representation.

When a computer scientist is asked how to represent a 3D object, the default approach is to use 3D coordinates and other attributes. That turns the object into a mathematical problem.

When today’s AI experts are asked the same question, they ask for data: lots of labeled 2D images showing the object from various angles. That turns the representation of 3D objects into a statistical problem. An artificial neural network links an image to a label according to how probable that match is, given the training data.

A third approach is Patom theory (PT), a brain model that represents vision as a collection of real-world objects, each recognizable through any associated sense: vision, hearing, touch, and more. When you ask people questions about what they see, they tend to rely on their knowledge of the world to judge relative sizes. The deficits seen after brain damage also reveal in considerable detail where visual recognition takes place.

Much of our AI work has started from mature sciences like computer science, math and logic, but if they don’t fit, we should make other choices. The starting point in science should be an open mind. If math isn’t working, try something else. If data imposes fundamental limitations, try something else! Limit the time spent pursuing a theory to a few years at most, not decades. In fact, try many things in parallel, since we don’t need to show that one model fails before trying another. That’s competition! As brains recognize multiple objects in our field of view, along with their color, motion and other contextual details, any proposed model should explain how that happens.

Patom Sensory Tools

In science, the simplest explanations are the best starting point. Rather than looking for an arbitrary solution, let’s assume a brain builds on what it is given. A brain doesn’t receive labeled data, nor does it receive mathematical coordinates to recognize objects.

For vision, we are given a few things. The visual field arrives through the rods and cones in our eyes, which signal greyscale and color, respectively. The image changes over time with our position in space and the movements of objects around us. We also get the position of our eyes as they focus, as in stereo vision <nature.com/article>.

The information available for vision can be compared with what is available for other needs. Motion in an animal isn’t just the result of muscle contractions. We also have touch senses like proprioception, which indicates the position of our limbs, and our inner ear provides balance and motion information. We even gauge an object’s weight from the amount of muscle contraction needed to hold it.

The point is that a brain has a variety of sensory information available to help it understand and move in the world.

This helps explain the difficulties in AI and robotics. Senses and motor control fall under Moravec’s Paradox <thelancet.com/article>. The paradox is:

the phenomenon … in which tasks that are easy for humans to perform (eg, motor or social skills) are difficult for machines to replicate, whereas tasks that are difficult for humans (eg, performing mathematical calculations or large-scale data analysis) are relatively easy for machines to accomplish.

Today’s robots remain limited in their movement and sensory skills decades after Moravec’s paradox was identified. Similarly, today’s machines, even LLMs and other deep learning models, fail to emulate human-level understanding and conversation.

Evolution starts with simple animals and simple brains. If the human brain, with its mathematics and language, evolved from them, there should be a path of mutations leading back to those simpler brains. That’s the easiest approach.

But the evolutionary record doesn’t appear to support the brain as a mathematical processor, especially when, with a little effort, mathematics and human language can be better explained with pattern-matching models!

The PT model views the brain as receiving multi-sensory input. The inputs link together to identify objects, which requires decomposing patterns down to the atomic level. This means each pattern is stored only once: each pattern atom represents a single pattern and no more.

A motion pattern can therefore be intricately connected to a balance pattern for use in motion adjustments. A visual pattern can be intricately connected to a somatosensory pattern and, through it, to the ideal motor pattern, so a limb can be moved accurately to a perceived location.

With pattern atoms, each pattern in the system can be composed of its parts, and each part can be used anywhere in the system through a single association. Most patterns are therefore stored at the edge of the brain network, where they connect to the sense itself. The uses of patterns are difficult to observe because the brain links back to those scattered locations, each applying PT’s single algorithm: a brain can only store, match and use hierarchical, bidirectionally linked patterns (sets and lists).
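As a rough illustration of the data structure just described, here is a minimal Python sketch, assuming a pattern is either an atom or a set or list of other patterns, each stored exactly once, with links kept in both directions (part to whole and whole to part). The names `Pattern`, `PatternStore`, `atom`, `set_of`, `list_of` and `match` are hypothetical and do not come from Patom theory publications; the sketch only shows store, match and use over unique, bidirectionally linked sets and lists, not how a brain actually does it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False keeps identity-based hashing, so patterns can sit in sets
class Pattern:
    """One pattern atom: stored once, linked down to its parts and up to its uses."""
    key: tuple                               # canonical identity, e.g. ("atom", "red")
    parts: tuple = ()                        # lower-level patterns (downward links)
    uses: set = field(default_factory=set)   # higher-level patterns (upward links)


class PatternStore:
    """Hypothetical store of hierarchical, bidirectionally linked sets and lists."""

    def __init__(self) -> None:
        self._by_key: dict[tuple, Pattern] = {}

    def _intern(self, key: tuple, parts: tuple = ()) -> Pattern:
        # Store each pattern exactly once; reuse the existing one if already present.
        pattern = self._by_key.get(key)
        if pattern is None:
            pattern = Pattern(key, parts)
            self._by_key[key] = pattern
            for part in parts:
                part.uses.add(pattern)       # add the upward link: part -> whole
        return pattern

    def atom(self, name: str) -> Pattern:
        return self._intern(("atom", name))

    def set_of(self, *parts: Pattern) -> Pattern:
        # Sets ignore order, so parts are sorted into a canonical order first.
        ordered = tuple(sorted(set(parts), key=lambda p: repr(p.key)))
        return self._intern(("set", tuple(p.key for p in ordered)), ordered)

    def list_of(self, *parts: Pattern) -> Pattern:
        # Lists keep order: (a, b) and (b, a) are different patterns.
        return self._intern(("list", tuple(p.key for p in parts)), tuple(parts))

    def match(self, *observed: Pattern) -> set[Pattern]:
        # Follow upward links: every stored pattern that uses all observed parts.
        if not observed:
            return set()
        candidates = set(observed[0].uses)
        for part in observed[1:]:
            candidates &= part.uses
        return candidates

    def __len__(self) -> int:
        return len(self._by_key)


if __name__ == "__main__":
    store = PatternStore()
    red, round_, sweet = store.atom("red"), store.atom("round"), store.atom("sweet")
    apple = store.set_of(red, round_, sweet)
    print(store.set_of(sweet, red, round_) is apple)  # same set in another order
    print(len(store))                                 # atoms plus one set
    print(store.match(red, round_) == {apple})        # found via upward links
```

Interning by a canonical key is what makes each pattern unique here: asking for the same set again returns the stored pattern rather than a copy, and matching simply walks the upward links from the observed parts.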

Senses Are Refined Machines

Lloyd Watts, a friend I know through the internet, is a successful entrepreneur and an expert in many areas, including brains. He explained to me how precise the human ears are as auditory machines. One of his companies, built on his emulation of human hearing, went public in an IPO.

He mentioned that my theory is the antithesis of his: to him, human hearing is like a perfect machine, while my brain research points to the brain being a perfect blank slate for many functions, constrained by anatomical consistency.

I think we are both right. I call the principle…

Watts’ Law: In a human brain, the older parts are very effective machines. Hearing, for example, uses elements that excel at processing sound, from its receipt to its conversion into neural impulses. This results from 500 million years of evolution, inherited by many animals and refined through useful mutations.

Vision is a sense that evolved to very high standards over a long evolutionary history, and humans have a good version of it to work with. The neurons in the eye and brain are therefore very well tested at the task of vision. The far newer extensions of the brain, in the human neocortex, don’t need the same kind of refinement because each region shares a similar anatomy. A region’s function is determined by its anatomically given connections, which provide only a somewhat imprecise starting point.

Vision

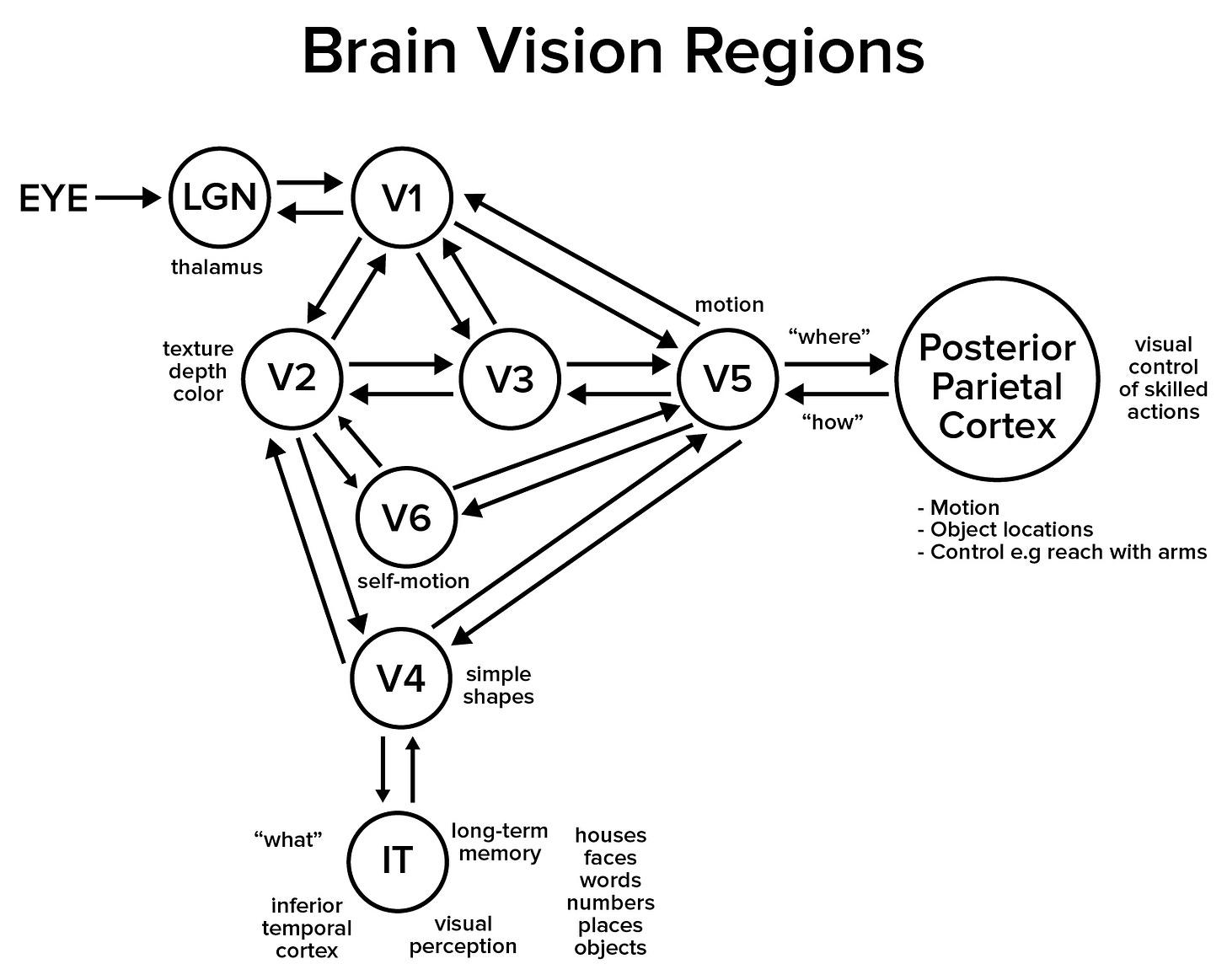

In vision, neurons in the human eyes signal through the optic nerve into the brain. Figure 1 above is a simplified representation of the human brain’s vision regions.

In Figure 1, the V5 region, if lost in both hemispheres, impairs the brain’s ability to see fluid motion. Patients see an object, then it vanishes and reappears closer, over and over. The deficit makes everyday tasks, such as navigating traffic, dangerous.

IT (Inferior Temporal Cortex) Damage

Damage to the IT (the ‘underside’ of the temporal lobe) can result in an inability to read or to recognize human faces!

This type of damage is evidence that the brain’s visual system is specialized to match stored patterns. Patterns of known faces can be stored in the IT and validated through connections to other regions. People with face blindness can usually still identify others by their voices, which shows that the rest of each person’s pattern remains, missing only the visual face-recognition part.
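To make the face-blindness reasoning concrete, here is a minimal, hypothetical Python sketch, assuming a known person is stored as one pattern linked in both directions to a face pattern and a voice pattern. Removing every face link stands in for IT damage; the person can still be reached from the voice. The names `PersonMemory`, `link`, `lesion` and `recognize`, and the sample data, are illustrative only and are not a model taken from the source.

```python
from collections import defaultdict


class PersonMemory:
    """Hypothetical multi-sensory memory: each person links to sense-specific patterns."""

    def __init__(self) -> None:
        self.up = defaultdict(set)    # (sense, pattern) -> people it identifies
        self.down = defaultdict(set)  # person -> (sense, pattern) links that identify them

    def link(self, person: str, sense: str, pattern: str) -> None:
        # Store the association once, in both directions.
        self.up[(sense, pattern)].add(person)
        self.down[person].add((sense, pattern))

    def lesion(self, sense: str) -> None:
        # Simulate losing one sense's recognition region (e.g. faces after IT damage).
        for key in [k for k in self.up if k[0] == sense]:
            for person in self.up.pop(key):
                self.down[person].discard(key)

    def recognize(self, sense: str, pattern: str) -> set:
        # Match an observed sensory pattern upward to the people it identifies.
        return set(self.up.get((sense, pattern), set()))


if __name__ == "__main__":
    memory = PersonMemory()
    memory.link("Alice", "face", "alice-face")
    memory.link("Alice", "voice", "alice-voice")
    memory.lesion("face")
    print(memory.recognize("face", "alice-face"))    # set(): face route lost
    print(memory.recognize("voice", "alice-voice"))  # {'Alice'}: voice route intact
```

The person pattern itself is never deleted by the lesion; only one sense's links to it disappear, which mirrors the point that the rest of the pattern survives.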

PPC (Posterior Parietal Cortex) Damage

Damage to the PPC causes a range of deficits. In vision, visuospatial deficits leave a patient struggling with tasks that require integrating visual and spatial information, such as judging distances and sizes. Imagine trying to pick up a cup, but your hand misses!

Visual Illusions

Of the regions in Figure 1, damage to the IT, the PPC or earlier visual areas can sometimes result in visual illusions: distortions in form, size, movement, color, and combinations of these <Principles of Neurology 7th Ed, P489>. Motion illusions are more typical of IT damage. Occipital and PPC damage is more often associated with a single object appearing as two or more objects, and with retained images. Tilted or upside-down perception is also possible.

Visual Hallucinations

Hallucinations, such as imagined flashes of light, colors, and shapes that can be still or moving, can arise from damage to any of the cortical areas in Figure 1. Beyond lights, colors and shapes, hallucinations of objects, people and animals are also possible.

Conclusion

Senses are one of the “hard problems” in AI. Robots need them to navigate their environment effectively, with the skill of animals. If we can model all the senses with the same approach, reproducing them becomes simpler and stays aligned with the human brain, whose sensory recognition regions look broadly similar. Patom theory treats the similar anatomy of brain tissue as evidence that the same functions are taking place.

There is an opportunity to use PT to account for the vast number of brain deficits observed in the medical literature, and to pin down more precisely which damaged region causes each one.

For vision specifically, it makes sense to look at whole-brain function to support the theoretical specifics of sensory function. For example, our ability to judge object distances for manipulation appears to combine visual and tactile senses as input to our motor control.

For these reasons, Patom theory provides an effective starting point for robotics and other AI emulations.

In my next article, I will explain why 'Language learning needs brain science.'

Language learning needs a machine that can generate and understand language of ever-increasing complexity. As language learning improves for students, so will language interactions for any human purpose. Robots with sensory and motor control can then add this hardened language capability, tested and improved through constant interaction with students in a safe, low-risk environment.

Do you want to read more?

If you want to read about the application of brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science”, which explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. It is available on Amazon in the US.

In the cover design, you can see the human brain incorporating its senses, such as the eyes. Its principles are being applied to a human-like robot, which brain science is improving toward full human emulation in looks and capability.