Why AI doesn't progress

...ignoring science and its real goals is slowing progress

AI was named in 1956 with the goal of emulating human capabilities, by investigating whether computers could be programmed to exhibit intelligent behavior. Today, the machine learning approach is being debated: is generative AI nearing AGI? AGI (artificial general intelligence) is defined as human-level capability, including sensory-motor control, consciousness and human skills like language. It was previously known as 'strong AI.'

Chapter 18 of my latest book reviews the killer AI apps that have yet to be completed, such as:

driverless cars,

speech recognition and

chatbots at human level.

These are the immediate goals of AI, not performing science experiments or solving every problem humans have yet to conquer, such as curing cancers, enabling everlasting life and engineering faster-than-light travel.

Explain to me how a machine trained on the statistics of the Internet and other corpora will create science!

Today, there is a split between two camps in AI:

the ‘AGI is almost here’ camp, which holds that it will arrive if only big tech companies work a little harder, versus

experts in AI and AI research who believe the current approach to AI will never reach AGI.

Experts in AI research believe current AI will never reach AGI, while executives and investors in AI-related companies argue that AGI will arrive within the next couple of years.

AI is not progressing

I don’t think it is provocative to point out that AI has hardly progressed since 1956 against the goals set at the time.

It is easy to find dozens of company announcements explaining their latest releases, but measuring them against the original goals separates the volume of announcements from actual progress.

We have speech recognition in production that generates many errors. The recognized words rhyme with what was spoken, but often form meaningless word sequences. Worse, those errors can’t be corrected by speaking; the user has to fall back on typing!

My rule is that science is needed to solve difficult problems before engineering can implement the solution.

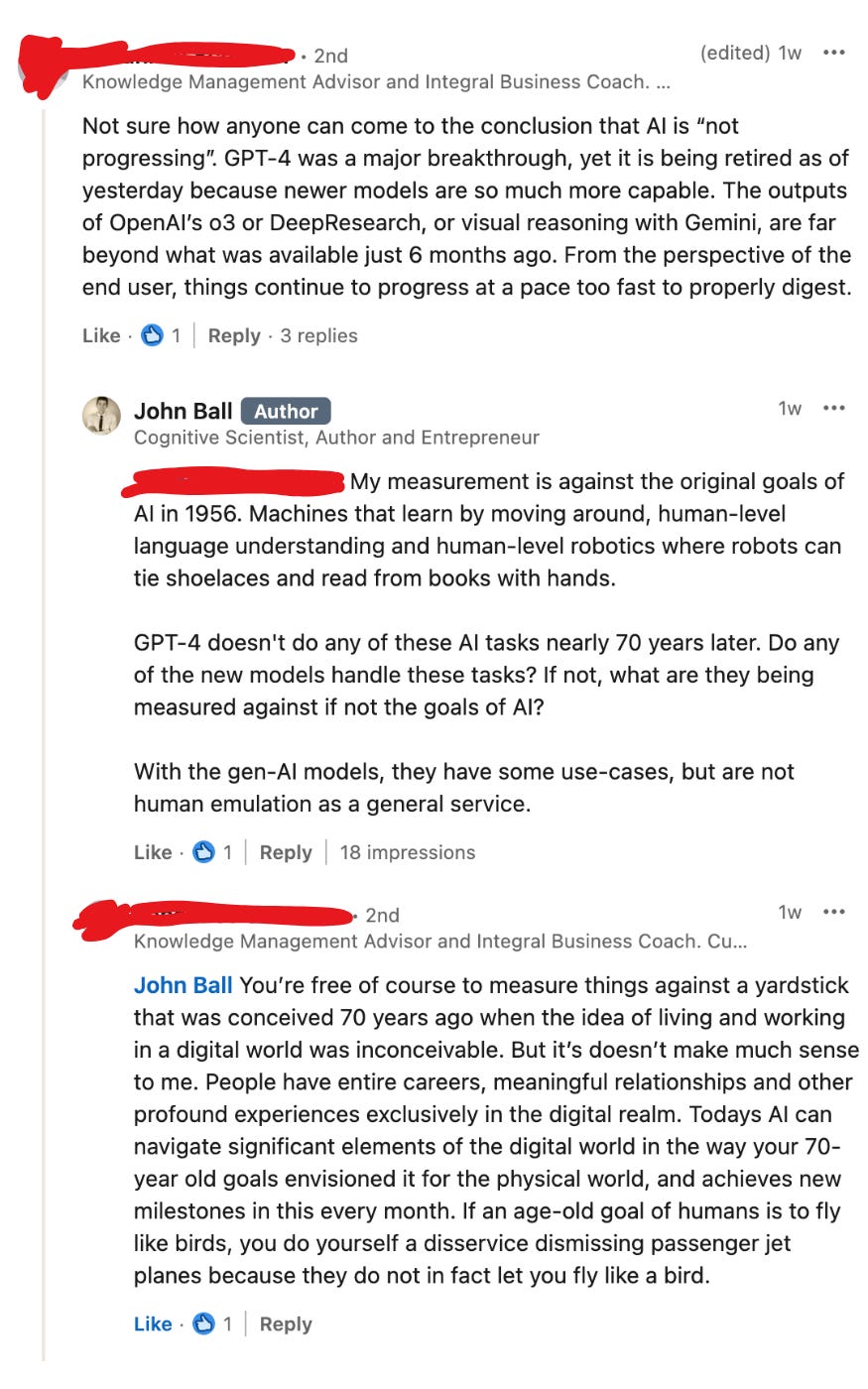

The narrative in today’s social media can be seen in the interaction below as a response to my typical observation that AI is not progressing against the original goals of AI:

My response focuses on what AI is about and what its goals are. If you call today’s error-prone statistical systems ‘AI’, then it is worth reconsidering what the term AI actually means.

Today's big tech tools cannot perform the basic functions that were targeted 70 years ago. Those are the killer AI apps.

Bird versus Plane

There is a fundamental difference between a bird and today's jets, yet they both fly. Between a person and today's robots, the difference lies in function itself: one has self-controlled, learned motion and the other does not. We need to focus on the function of machines.

Today's speech recognition doesn't work like a person does and cannot be used to converse in any human language.

Today's chatbots cannot understand human language and make errors in recognizing simple sentences. Human context is missing, especially from generative AI, as can be seen in spurious responses to words like 'Alan Turing': where a human would ask 'which one?', ChatGPT writes a paragraph about the mathematician and computer designer who died in the 1950s.

Progress on arbitrary benchmarks doesn't mean progress toward AI's goals. It may move the needle in some direction, but the goal remains infinitely far away. The fundamentals need to be solved first.

Conclusion

One camp, predominantly led by big tech CEOs past and present, says AI will be here soon. They offer a vision of machines that solve everything: cancers, science and engineering. There is no evidence that any currently popular technology is on that path.

The other camp is led by experts in science and engineering. Look at universities not funded by tech giants or startups and ask their experts what they think. The AAAI (Association for the Advancement of Artificial Intelligence) is an expert body whose recent survey of experts supports the view that:

today’s AI is not on the path to AGI!

I argued on LinkedIn that use cases for statistical AI, for those who do a lot of work on the internet, do not amount to AI progress against its goals. Maybe killer use cases will be found, but why call it AI? Calling it AI allows others to claim that it is intelligent and that it is about to solve problems needing super-intelligence. It isn’t.

Let’s get back to a world where automated functions are judged on their merits. If we want to talk about AI, let’s not change its definition to exclude the obvious goals from the 1950s that are still needed for the promised AI revolution.

Much of generative AI’s market value comes from a perception that AI, in its original sense, is being solved. But as a growing number of experts are highlighting, statistical models are not solving it.

Aiming to solve AI is a worthy goal in science and engineering. Claiming that a technology which clearly isn’t on that path is on it may help with marketing and corporate valuations. The claim may be popular (if only we can fix all the current limitations), but claims of success are misleading while those seemingly insurmountable problems remain unsolved.

Do you want to get more involved?

If you want to get involved with our upcoming project to build a gamified language-learning system, the site to track progress is here. You can stay informed by adding your email to the contact list on that site.

Do you want to read more?

If you want to read about the application of brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science”, which explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. The book is available here on Amazon in the US.

In the cover design below, you can see the human brain incorporating its senses, such as the eyes. The brain’s principles are being applied to a humanlike robot, which is being improved with brain science toward full human emulation in looks and capability.