The “LLM Revolution” (LLM stands for Large Language Model) is built on a fundamental compromise in accuracy. Statistical word sequences don’t reflect how languages work. I don’t think that’s debatable, since the science of linguistics is very clear on how languages are put together.

Languages are built on a hierarchy: from sounds (phones to phonemes to syllables), characters (letters) or touch (Braille); to words (morphemes to indivisible sequences); to phrases (syntactic sequences of words for some reason); to clauses (meaningful sets); to sentences (meaningful sequences of words that make sense in context).
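
To make the contrast with a flat word sequence concrete, here is a minimal Python sketch of that hierarchy as a tree. The `Unit` class, its level names and the analysis of “The child runs” are illustrative assumptions, not a formal grammar; `leaves()` shows what is left when the structure is thrown away and only the sequence remains.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Unit:
    """One node in a toy linguistic hierarchy (sentence > clause > phrase > word > morpheme)."""
    level: str
    text: str
    children: List["Unit"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Flatten the tree into the bare sequence of smallest units, discarding the hierarchy."""
        if not self.children:
            return [self.text]
        return [leaf for child in self.children for leaf in child.leaves()]

    def depth(self) -> int:
        """Number of levels from this node down to its deepest leaf."""
        return 1 + max((child.depth() for child in self.children), default=0)


# Hypothetical analysis of "The child runs"; 'runs' is split into the morphemes 'run' + '-s'.
sentence = Unit("sentence", "The child runs", [
    Unit("clause", "The child runs", [
        Unit("phrase", "The child", [Unit("word", "The"), Unit("word", "child")]),
        Unit("phrase", "runs", [
            Unit("word", "runs", [Unit("morpheme", "run"), Unit("morpheme", "-s")]),
        ]),
    ]),
])

print(sentence.leaves())  # ['The', 'child', 'run', '-s']
print(sentence.depth())   # 5
```

The flattened list keeps the order of the pieces but loses which of them belong together, which is the information the hierarchy carries.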

Nobody ever said that word sequences alone make up a language, except maybe computer scientists who haven’t studied linguistics! That’s not the science of language. Extracting sense from word sequences began around 1990 as a half-hearted attempt to overcome the limitations of the rules-based models of the day.

There is an excellent read on the shift to statistical approaches here: “A Pendulum Swung Too Far,” by Kenneth Church of Johns Hopkins University. His presentation is here. (Thanks for pointing me to this some time ago, Walid Saba!)

Both approaches to AI discussed in the article, written in 2007, are formal science: theory versus data. Natural science, such as brain science, is part of biology, as are the cognitive sciences. That’s a third option, normally considered too difficult because we (computer scientists) don’t understand how the brain works. Linguistic models usually incorporate meaning within a semiotic model (signs connected to meaning) in context (semantics constrained by what has been happening).

This isn’t a criticism of computer science, other than to point out that language is studied intensively in the cognitive sciences, as well as in brain science and other empirical sciences supported by experimentation.

Let’s look at how the errors introduced with Google’s 2017 transformer model (written by 8 Google ‘formal scientists’) hold back progress toward AGI in LLMs.

Transformers aren’t enough

The famous paper that ushered in the exponential use of electricity, “Attention Is All You Need,” was published by Google in 2017 (see also its Wikipedia page here).

My joke about the exponential use of electricity refers to the transformer’s use of GPUs to perform insane levels of computation that are unlikely ever to be commercially viable, as seen in OpenAI’s expected annual loss of roughly USD 5 billion.

“the company [OpenAI] expects to lose roughly $5 billion this year”

The Transformer paper above proposed a model that improved on the earlier use of word vectors. Word2vec and GloVe produce static word vectors (lists of numbers, usually compared using cosine similarity) that merge together the meanings of an ambiguous word. ELMo, from the Allen Institute for AI, improved on this by creating different vectors for the same word depending on its context. Transformers go further, adding the sequence of surrounding ‘words’ (tokens) to tell apart the same word in different uses, as part of their mission to predict the next word. But human brains don’t use language like this.
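
As a rough illustration of the “merged meanings” problem with static vectors, here is a small Python sketch. The three-dimensional vectors are invented for the example (real Word2vec or GloVe vectors have far more dimensions), and `static_vector` is a hypothetical stand-in for a lookup in a pretrained table. Because the lookup ignores the sentence, the river sense and the money sense of “bank” get the same vector, which sits equally close to ‘river’ and ‘money’.

```python
import math
from typing import Dict, List

# Made-up 3-dimensional vectors. A static model such as Word2vec or GloVe keeps
# exactly one vector per word form, so both senses of "bank" share this entry.
STATIC_VECTORS: Dict[str, List[float]] = {
    "bank": [0.5, 0.5, 0.1],
    "river": [0.9, 0.1, 0.0],
    "money": [0.1, 0.9, 0.0],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """cos(a, b) = (a . b) / (|a| * |b|); 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def static_vector(word: str, sentence: str) -> List[float]:
    """Hypothetical static lookup: the sentence argument is accepted but ignored."""
    return STATIC_VECTORS[word]


river_bank = static_vector("bank", "we sat on the river bank")
money_bank = static_vector("bank", "she paid the money into the bank")
print(river_bank == money_bank)  # True: one vector for both senses
print(math.isclose(cosine_similarity(river_bank, STATIC_VECTORS["river"]),
                   cosine_similarity(money_bank, STATIC_VECTORS["money"])))  # True
```

Contextual models such as ELMo and transformers instead compute a different vector for each occurrence from the surrounding tokens; that step is not shown here.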

Ultimately, language doesn’t work like this at all. The addition of semantics (meaning) and pragmatics (context) is essential to understand human language.

It is fun to see how far billions of dollars can take LLMs, except that the spending is holding up investment in the solutions the AGI community is impatiently waiting for. What’s not fun is that it also promises to burn the planet as it goes.

These models provide a mechanism to capture the statistical similarity of letter sequences in a corpus, along with those in a given set or list, but they exclude meaning and context as a human defines it.
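
The idea of predicting the next word purely from observed sequences can be illustrated with a much simpler relative of the transformer, a bigram model. This Python sketch is a toy under that assumption, not how transformers work internally (they use learned vectors and attention over long contexts rather than raw pair counts), but it shows the same principle: the model stores which words followed which, and nothing about what the words mean or where running usually happens.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, List, Optional


def train_bigrams(corpus: List[str]) -> DefaultDict[str, Counter]:
    """Count, for every word, which words follow it. Only sequences are stored."""
    follows: DefaultDict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows: DefaultDict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = follows.get(word.lower())  # .get avoids inserting an empty entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = [
    "the child runs in the park",
    "the dog runs in the park",
    "the child runs in the living room",
]
model = train_bigrams(corpus)
print(predict_next(model, "runs"))    # in
print(predict_next(model, "in"))      # the
print(predict_next(model, "living"))  # room
```

Running in a living room is as acceptable to this model as running in a park; it only knows that both sequences occurred.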

Transformers versus human language

All you need are words, their meanings, and the knowledge they compose into, based on our experience of the world (context).

Semantics (meaning)

When a human learns the meaning of a word, it becomes associated with some multi-sensory thing (objects or referents) or some property or event (semantic predicates). The word ‘run’ means someone moving quickly with perhaps no foot on the ground at some point, while ‘someone’ refers to some human being, a person who is expected to know a language, to move under their own power and so on.

Pragmatics (context)

To learn a language, a human uses their ability to understand the world and the current context, and joins that understanding up with the meaning referred to. If ‘someone’ is always referred to as being on planet Earth, context expects that someone to be on Earth. If ‘running’ takes place outside, and not in a living room, ‘running’ will be expected to be somewhere other than a living room. Of course, context readily admits new possibilities, but its known features limit our expectations.
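
The way context narrows expectations without ruling anything out can be sketched as a lookup of what has been experienced so far. In this minimal Python example the `learned_context` table, its entries and the three labels are assumptions made for illustration; an “unexpected” result marks something to question rather than reject, and the hypothetical `learn` function shows context admitting a new possibility.

```python
from typing import Dict, Set

# Hypothetical expectations learned from experience: the settings in which
# each kind of participant or event has been encountered so far.
learned_context: Dict[str, Set[str]] = {
    "someone": {"Earth"},
    "running": {"outdoors", "park", "track"},
}


def expectation(thing: str, setting: str) -> str:
    """Classify a (thing, setting) pair against what context has taught so far."""
    seen = learned_context.get(thing)
    if seen is None:
        return "unknown"     # nothing learned yet, so no expectation either way
    if setting in seen:
        return "expected"
    return "unexpected"      # not impossible, just outside known experience


def learn(thing: str, setting: str) -> None:
    """A new experience widens the possibilities for next time."""
    learned_context.setdefault(thing, set()).add(setting)


print(expectation("running", "park"))         # expected
print(expectation("running", "living room"))  # unexpected
learn("running", "living room")
print(expectation("running", "living room"))  # expected
```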

Predicates determine their arguments

I wish the vocabulary of linguistics were better known. Predicates relate things. In the old ‘syntax terminology,’ semantic predicates are verbs, prepositions, adjectives and adverbs. But the meaning of the thing a predicate relates, its argument, cannot exceed the expectations we learned as part of the language.

In “the happy situation,” happy doesn’t mean laughing and jolly, since situations cannot be either. The kind of happy referred to is closer to a situation in which participants become happy. In “a happy child,” by contrast, happy describes a young person rather than a situation: the child is in some jolly (happy) state.
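
The ‘happy’ example works like what linguists call a selectional restriction: the type of the argument decides which sense of the predicate applies. Here is a minimal Python sketch; the sense glosses, the noun types and `sense_of_happy` are hypothetical and far coarser than a real lexicon.

```python
from typing import Dict

# Hypothetical senses of 'happy', keyed by the type of argument each one can modify.
HAPPY_SENSES: Dict[str, str] = {
    "situation": "bringing about happiness in its participants",
    "person": "in a joyful (happy) state",
}

# Hypothetical semantic types for a few nouns.
NOUN_TYPES: Dict[str, str] = {
    "situation": "situation",
    "ending": "situation",
    "child": "person",
    "teacher": "person",
    "rock": "object",
}


def sense_of_happy(noun: str) -> str:
    """Return the sense of 'happy' that the modified noun's type allows."""
    arg_type = NOUN_TYPES.get(noun)
    if arg_type not in HAPPY_SENSES:
        # e.g. 'happy rock': no learned sense fits, so the phrase needs questioning
        raise ValueError(f"'happy {noun}': no sense of 'happy' fits this argument")
    return HAPPY_SENSES[arg_type]


print(sense_of_happy("situation"))  # bringing about happiness in its participants
print(sense_of_happy("child"))      # in a joyful (happy) state
```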

In my language system, word meanings, grounded in semiotics (the science of signs), form the basis of patterns: sequences that have been experienced before. A typical sentence contains massive numbers of predicates that constrain the meaning of its phrases, and of the sentence itself, to be consistent. Those consistent patterns must also be valid in context; otherwise, they need to be questioned.
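
A toy version of that consistency check can be sketched in Python: each predicate states what it requires of its arguments, every requirement must hold, and any argument not grounded in the current context is flagged for questioning. This is not the author’s system; `PredicateUse`, the property labels and `check_sentence` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class PredicateUse:
    """One predicate in a sentence, the arguments it relates, and what it requires of them."""
    predicate: str
    arguments: List[str]
    requires: Set[str]


# Hypothetical properties learned for a few words.
PROPERTIES: Dict[str, Set[str]] = {
    "child": {"animate", "human"},
    "dog": {"animate"},
    "idea": {"abstract"},
}


def check_sentence(uses: List[PredicateUse], context: Set[str]) -> List[str]:
    """List every problem found; an empty list means consistent and valid in context."""
    problems: List[str] = []
    # 1. Consistency: every predicate's requirements must hold for each of its arguments.
    for use in uses:
        for arg in use.arguments:
            missing = use.requires - PROPERTIES.get(arg, set())
            if missing:
                problems.append(f"{use.predicate}({arg}) lacks {sorted(missing)}")
    # 2. Context: arguments the current situation doesn't contain are questioned.
    for arg in sorted({a for use in uses for a in use.arguments}):
        if arg not in context:
            problems.append(f"question: '{arg}' is not part of the current context")
    return problems


context = {"child", "idea"}
tired_child_runs = [
    PredicateUse("tired", ["child"], {"animate"}),
    PredicateUse("run", ["child"], {"animate"}),
]
print(check_sentence(tired_child_runs, context))  # []
print(check_sentence([PredicateUse("run", ["idea"], {"animate"})], context))
# ["run(idea) lacks ['animate']"]
print(check_sentence([PredicateUse("run", ["dog"], {"animate"})], context))
# ["question: 'dog' is not part of the current context"]
```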

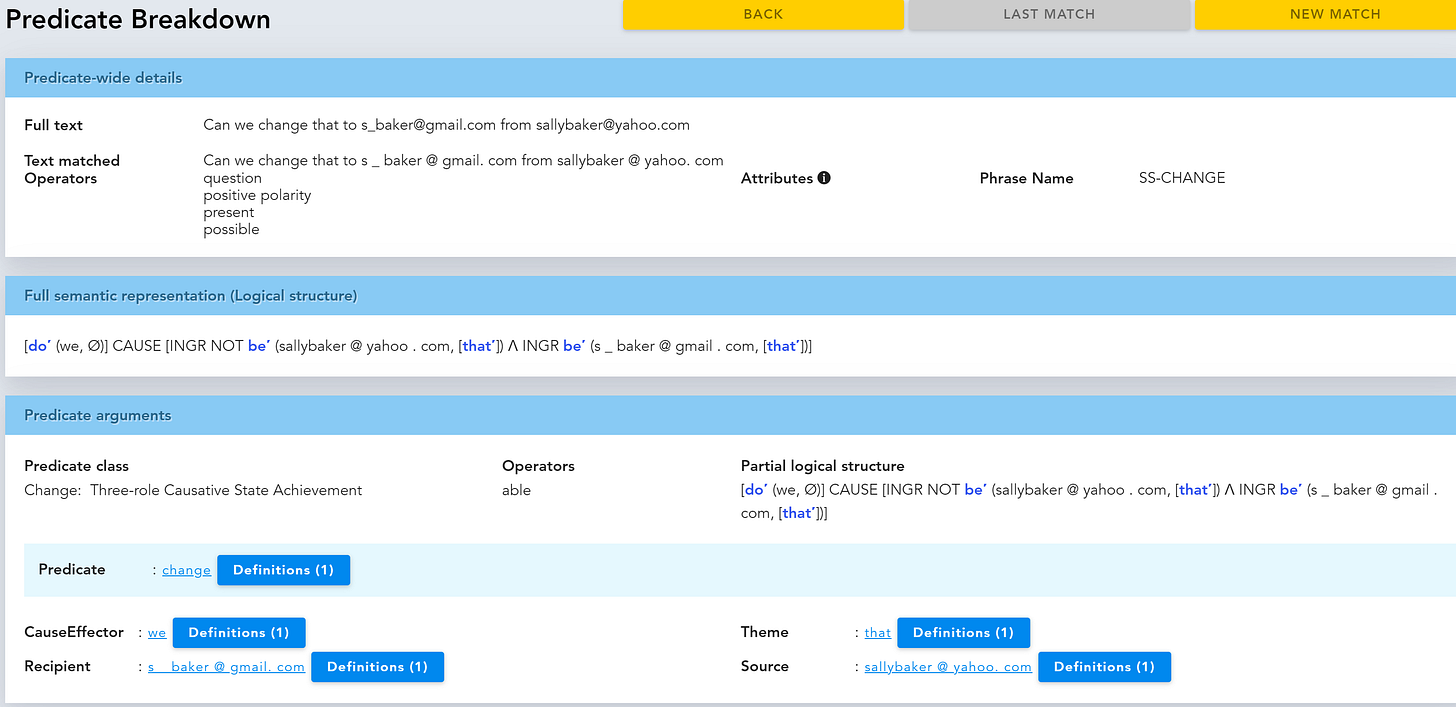

In the image, the predicate ‘change’ constrains ‘we’, ‘that’ and ‘the email addresses’.

Using such constraints is nothing new in linguistics, but applying them has been difficult for a completely different reason. Most linguistics today has a strong dose of history baked in, which blocks progress because errors in the early approaches remain locked in. For that reason I have adopted Role and Reference Grammar (RRG): a single, cohesive approach driven by a single team across multiple languages.

Linguistic science has been stuck on parts of speech since at least the 1950s. Once those categories are discarded (nouns, verbs, adjectives, determiners, adverbs, prepositions, etc.), the computational complexity goes away, as long as context and semantics are added in. No requirement to burn the planet.

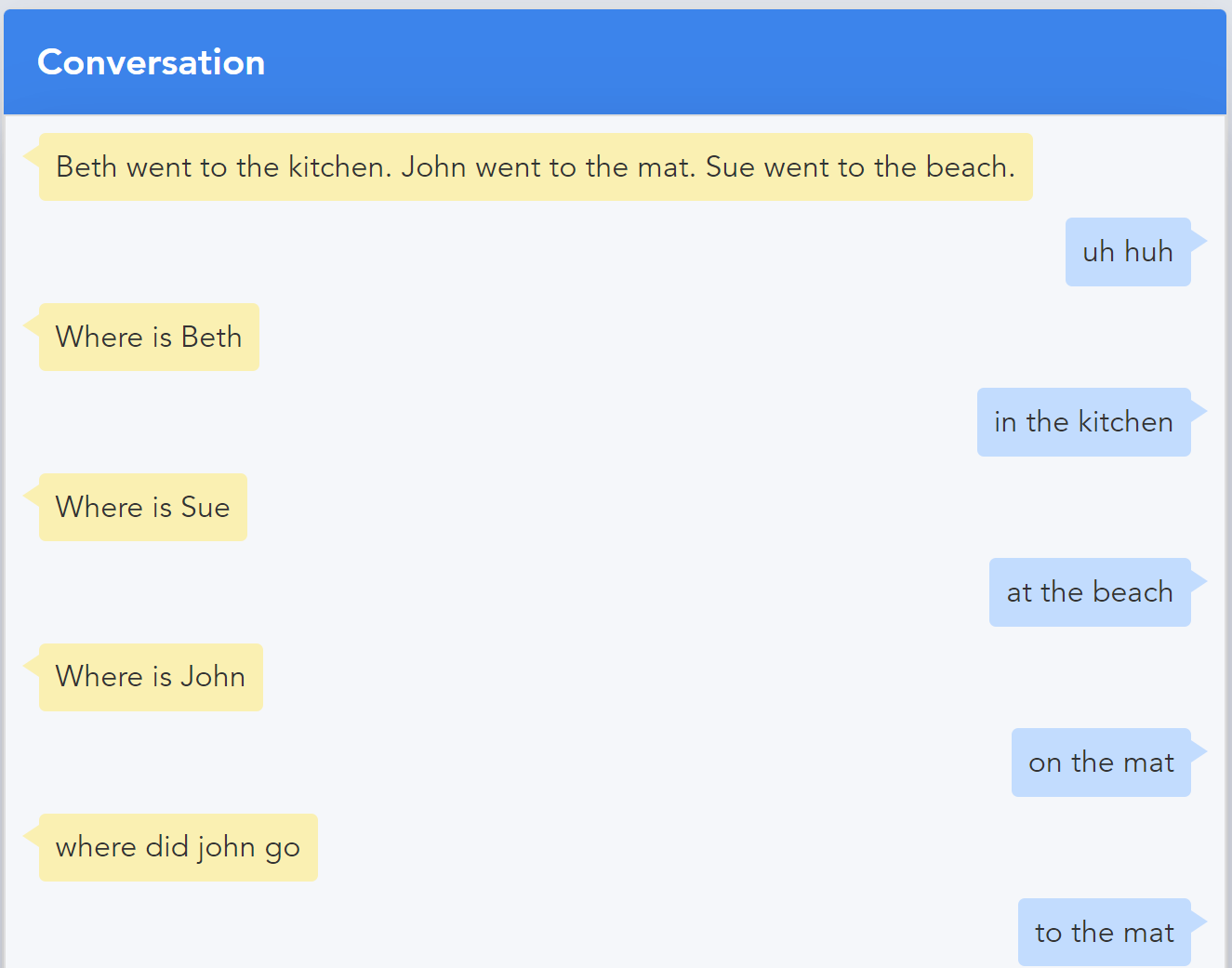

But in conversation we know what to expect, and in the conversation below, the use of meaning in context with a language generator produced human-like answers.

Conclusion

Since 1990, the focus on getting value out of statistical systems has become more intense. Even with almost limitless amounts of money, the outstanding problems with today’s AI technologies can be traced back to their approach: formal science. If we factor out meaning and context, we shouldn’t be surprised that errors (hallucinations/confabulations/making stuff up) are a direct consequence.

If you asked the experts in today’s large corporations promoting LLMs whether they are on the path to AGI, they would likely say they are. But if asked how the lack of human-like semantics and context can ever be solved, what would they say?

If we focus on the goal, creating human-like interactions with human language and knowledge, the scientific method gets us there. That means focusing on the natural sciences rather than the formal sciences.