Today, let’s explore a better way to think about brains, and therefore, AI.

Spoiler: analyzing brain regions is better than analyzing neurons.

In the 1990s I finalized Patom theory (PT), a brain model built on the wealth of information amassed in the cognitive sciences, including the work of popular scientist Baroness (Susan) Greenfield. The function of brain regions became central to the theory because damage to a brain region causes predictable deficits, and scans of normal brains show specific regions involved in specific tasks.

In contrast, the last few decades of AI development have focussed on artificial neurons and how they work when organized into networks. The dominance of machine learning has led to an ongoing reliance on these models despite their known limitations. For example, their opaque, black-box operation can produce highly probable responses that are wrong (hallucinations).

An upcoming article will discuss the human split between meaning and knowledge, and how it addresses the hallucination problem often seen in LLMs.

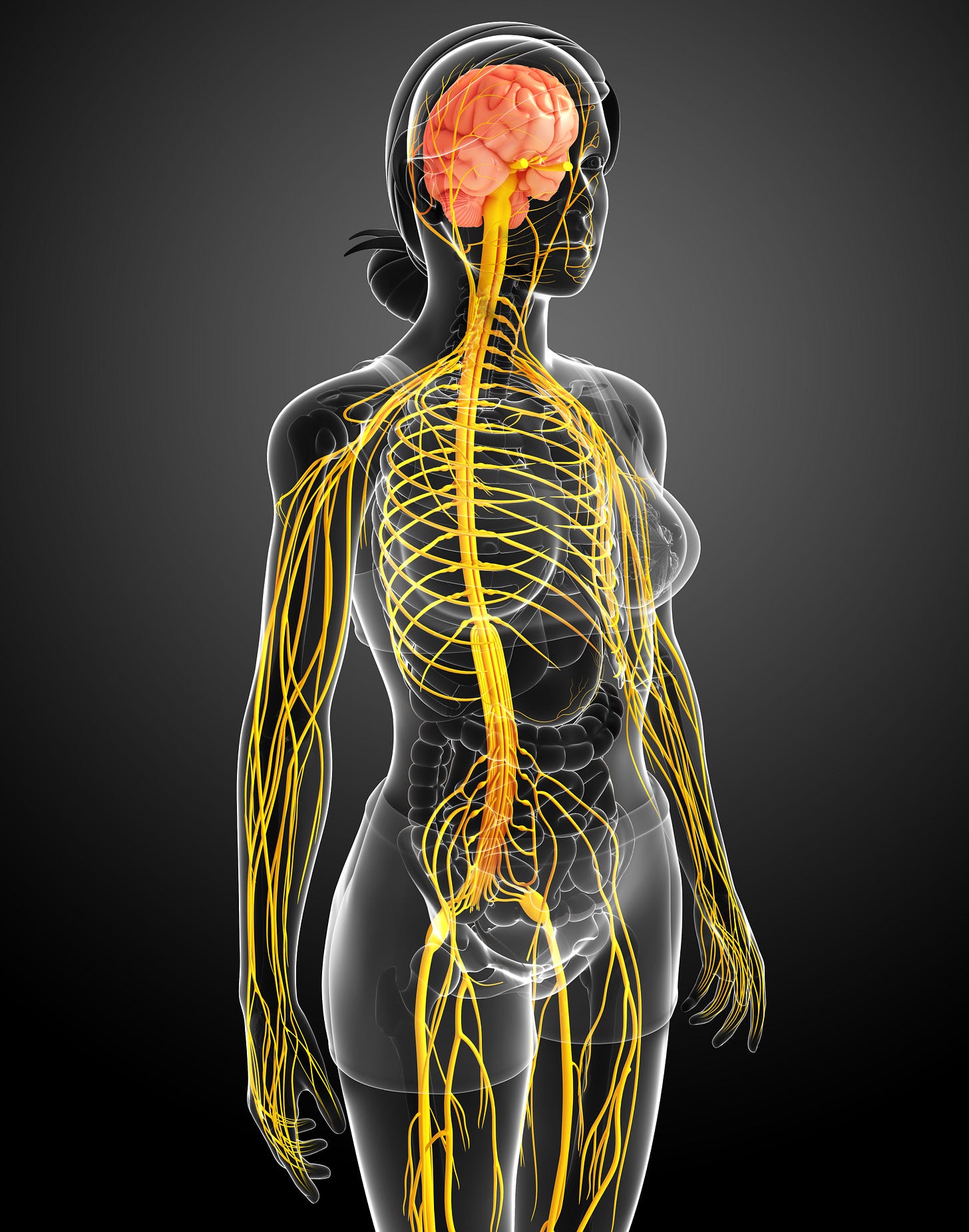

Next, let’s compare monitoring a muscle’s movement in the human body with monitoring neurons in the human brain, to illustrate the role of brain regions in an interconnected system.

Understanding Systems

To understand the body’s motion, we need to consider the system that drives it, from sensory input to the resulting movement.

Consider setting up an experiment to find out why a right arm is lifted. We hook a student up to our monitors to capture when muscles activate, and then create graphs to understand why the arm is moving.

Now, in a classroom, imagine a teacher asking the class to raise their right arms. The monitoring gear goes crazy as the arm moves up, and then back down again. The graphs are generated after the class ends and the arm stops moving, and then the analysis begins.

The scientists analyze the graphs and see that every 4–5 minutes the arm is lifted, held for about 10 seconds, and then lowered. Then, after about 50 minutes of activity, nothing else happens. Despite the amazing technology, nothing in the data explains why the arm is being lifted in this strange way!

The point is that our brain is a system: sense inputs are used to understand what is happening, and motor outputs move our bodies. If we set up an experiment that leaves out visual and auditory input, we could miss the reason our body moves. In this case, the teacher’s command, “Raise your hand!”, is received in the subject’s ear, converted to neural signals received by the primary auditory cortex, recognized as words in the temporal lobe, and converted to meaning near Wernicke’s area. The brain then activates its arm-lifting pattern, and the motor cortex signals the arm to move via the brainstem.

The monitoring equipment needs to be expanded to track the brain’s actual function in moving the arm.
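The experiment's blind spot can be sketched in a few lines of Python. This is a toy model, assuming the pathway described above can be treated as an ordered list of stages. The stage labels and signal strings are hypothetical illustrations, not a neural simulation. Monitoring only the muscles records that the arm moved, but none of the stages that explain why.

```python
# Toy model of the command's path from ear to arm, following the text.
# Stage labels and signals are hypothetical, for illustration only.
PATHWAY = [
    ("ear", "'Raise your hand!' as sound"),
    ("primary auditory cortex", "neural signal"),
    ("temporal lobe", "recognized words"),
    ("near Wernicke's area", "meaning: raise the arm"),
    ("motor cortex", "arm-lifting pattern"),
    ("brainstem", "motor command"),
    ("arm muscles", "muscle contraction"),
]


def run_trial(monitored):
    """Return the (stage, signal) pairs that the monitored stages can see."""
    return [(stage, signal) for stage, signal in PATHWAY if stage in monitored]


# The original experiment: only the arm muscles are wired up.
muscles_only = run_trial({"arm muscles"})
# The expanded experiment: every stage of the system is monitored.
whole_system = run_trial({stage for stage, _ in PATHWAY})

print(muscles_only)     # [('arm muscles', 'muscle contraction')]: no cause visible
print(whole_system[3])  # ("near Wernicke's area", 'meaning: raise the arm')
```

Only the expanded trace contains the stage that carries the reason for the movement.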

This is somewhat like a problem we see today, where some scientists look for processing functions inside individual neurons rather than at the system that operates between brain regions.

Why neurons are the wrong focus

Neurons aren’t processors. The computational view overlaid onto brain models makes us look for processors when none are needed and has led to today’s computationally intensive systems driven by machine learning.

In recent years, there have been stories claiming that the inner workings of a neuron’s nucleus perform the complex computations behind brain function. Some propose dendrites to explain the brain’s computing power. Others propose that a brain’s glial cells are involved in the “intelligence of a species.” Still others claim that the synapses connecting neurons house complex processing units that explain brain processing. Yet others propose aligning brains with the quantum world.

Ockham’s razor holds that, of competing scientific models, the simpler one is preferred:

“Occam’s razor is a principle often attributed to 14th–century friar William of Ockham that says that if you have two competing ideas to explain the same phenomenon, you should prefer the simpler one.”

Rather than the quest for a Holy Processor in our heads, the better approach is Patom theory’s model, because it’s simpler. In PT, patterns are stored where they are matched, and they signal when matched again as part of a larger pattern.
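As a rough illustration of "stored where matched, signal when matched again as part of a larger pattern", here is a toy Python sketch. The class and method names (`PatternStore`, `store`, `match`) are hypothetical. The rule that a larger pattern matches only once all of its parts are active is a simplifying assumption, not a description of how PT is implemented.

```python
from collections import defaultdict


class PatternStore:
    """Toy hierarchical pattern store (hypothetical, not PT's implementation).

    A pattern is stored once, where it is first learned. When it is matched
    again, it signals every larger pattern it is part of.
    """

    def __init__(self):
        self.parts = {}                  # pattern -> tuple of its sub-patterns
        self.parents = defaultdict(set)  # sub-pattern -> larger patterns using it

    def store(self, pattern, parts=()):
        """Store a pattern (and links to its parts) unless it is already known."""
        if pattern not in self.parts:
            self.parts[pattern] = tuple(parts)
            for part in parts:
                self.parents[part].add(pattern)

    def match(self, inputs):
        """Return every stored pattern matched, directly or via its parts."""
        active = {p for p in inputs if p in self.parts}
        frontier = set(active)
        while frontier:
            # Newly matched patterns signal the larger patterns they belong to;
            # a larger pattern matches once all of its parts are active.
            candidates = {parent for p in frontier for parent in self.parents[p]}
            frontier = {
                c for c in candidates
                if c not in active and all(part in active for part in self.parts[c])
            }
            active |= frontier
        return active


store = PatternStore()
for feature in ("round", "white", "red stitching"):
    store.store(feature)
store.store("baseball", parts=("round", "white", "red stitching"))

print(sorted(store.match({"round", "white", "red stitching"})))
# ['baseball', 'red stitching', 'round', 'white']
print(sorted(store.match({"round", "white"})))
# ['round', 'white']
```

Note that nothing in the sketch "computes" a baseball: the larger pattern is simply a stored combination that becomes active when its parts signal it.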

Language comes from meaning, but what’s that?

In PT, language emerges by labeling what a brain already knows (meaning) and then learning patterns on top of those labels, from patterns of words and/or meaning (syntax).

To emulate a human brain, AI needs meaning, just as a brain has.

A dog’s brain moves its body, and a human’s brain does the same. Language is just an evolution on top of a mammal’s brain, not a revolution. On that basis, what representation does language sit on top of, and what meaning can it access?

What does it mean when we do a complex task, such as playing the piano, thinking about a problem, or eating at a restaurant? Let’s look at a baseball example to understand the meaning of a complex motion.

What does it mean to pitch a baseball?

To pitch a baseball, you pick it up, move to the mound if there is one, and stand on the rubber. There are many further things to do: check the catcher’s signals, perhaps stare at the batter, and check for runners on base. Now get ready for the wind-up!

All the steps can be recalled from your brain’s experience of pitching and then described in language. Language allows your brain to describe each step even if the experience isn’t linguistic. The meaning of a pitch includes the experience of doing it, down to the full sequence of sub-steps.

Our brain can convert the experience to language, in as much detail as is needed.

PT explains that events like pitching are stored as meaning, just as any other animal would store them. Pitching means all the steps described and more. We can learn pitching by watching it as well as by doing it, and can expand it with additional experience.

An event means its steps and their sequence, which we can describe in language. Beware, however, that it can take A LOT of words to describe an event.

In terms of PT, patterns are connected, and a pitch is composed of (sub)patterns. Language can describe each (sub)pattern by converting it into sentences.
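A toy Python sketch of this idea treats the pitch as a tree of (sub)patterns and "language" as turning each node into a sentence, expanding as many levels as the listener needs. The grouping of the steps (get set, read the situation, deliver), the final "throw the pitch" step, and the sentence template are hypothetical, chosen only for illustration.

```python
# Hypothetical event hierarchy for a pitch, using the steps from the text.
# Each node is (description, [sub-steps]); leaves have no sub-steps.
PITCH = ("pitch the baseball", [
    ("get set", [
        ("pick up the ball", []),
        ("move to the mound", []),
        ("stand on the rubber", []),
    ]),
    ("read the situation", [
        ("check the catcher's signals", []),
        ("stare at the batter", []),
        ("check for runners on base", []),
    ]),
    ("deliver", [
        ("go into the wind-up", []),
        ("throw the pitch", []),
    ]),
])


def describe(event, detail):
    """Turn an event into sentences, expanding sub-steps up to `detail` levels."""
    label, steps = event
    if detail == 0 or not steps:
        return [f"I {label}."]
    sentences = []
    for step in steps:  # keep the sequence of sub-steps in order
        sentences.extend(describe(step, detail - 1))
    return sentences


print(describe(PITCH, 0))       # ['I pitch the baseball.']
print(describe(PITCH, 1))       # ['I get set.', 'I read the situation.', 'I deliver.']
print(len(describe(PITCH, 2)))  # 8
```

Raising `detail` trades brevity for precision, which is why describing an event in full can take so many words.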

An event involves a sequence of steps, and our brain can describe each step with language. It seems language can access ANY meaningful brain area and convert it to words, and because meaning is more general than language, there are many ways to express it.

Deficits

What are brain deficits? They are losses of specific abilities caused by brain damage. Our brain is quite well protected, but damage can still occur. There are a vast number of deficits, and some that AI needs to explain at least as well as PT does include:

the loss of visual color recognition (and imagination) (achromatopsia)

the loss of visual motion perception (motion blindness)

the loss of recognition of parts of an object, but not wholes (form agnosia)

the inability to recognize more than one object at a time (simultanagnosia)

the inability to recognize the faces of familiar people (prosopagnosia)

the loss of fluent speech production (Broca’s aphasia)

the loss of language comprehension (Wernicke’s aphasia)

Why can so many deficits be explained simply by knowing which region of the brain is damaged?
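A region-level view makes deficits straightforward to model. Below is a deliberately simplified Python sketch, assuming each region stores one kind of pattern. The region-to-function table uses commonly cited associations (V4 with color, V5/MT with motion, and so on), heavily simplified, as an illustration rather than a claim from PT. The functions `capabilities` and `deficits` are hypothetical.

```python
# Simplified, hypothetical mapping from regions to the patterns they store.
# Real anatomy varies between individuals; this only shows that removing
# one region's stored patterns yields a specific, predictable loss.
REGION_PATTERNS = {
    "V4": {"color"},
    "V5/MT": {"visual motion"},
    "fusiform face area": {"familiar faces"},
    "Broca's area": {"speech production"},
    "Wernicke's area": {"language comprehension"},
}


def capabilities(damaged=()):
    """Capabilities still supported when the `damaged` regions are lost."""
    return set().union(
        *(patterns for region, patterns in REGION_PATTERNS.items() if region not in damaged)
    )


def deficits(damaged):
    """Capabilities lost compared with an intact brain."""
    return capabilities() - capabilities(damaged)


print(deficits({"V4"}))                  # {'color'}, cf. achromatopsia
print(deficits({"fusiform face area"}))  # {'familiar faces'}, cf. prosopagnosia
```

Under a processing view, by contrast, damage would be expected to degrade a computation, and it is less obvious why the loss should be so specific.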

Reverse Engineering a brain

To reverse engineer a brain, the analysis differs depending on whether the brain’s collections of neurons are (a) providing a processing function or (b) regions for matching, using, and storing patterns.

How would these processing neurons be programmed?

A brain region reflects brain anatomy. The regions evolved to be general pattern-matching units. Anatomy is biologically constrained to connect the same general regions together in each brain, and evolution ensures the anatomy’s effectiveness.

We know from neurosurgeons that anatomical brain regions can perform different functions, depending on the individual. In some individuals, a brain region seems to do nothing, while in others the patient relies on it. This involves the concept of plasticity.

PT explains plasticity through the storage of patterns: they can be stored anywhere in a connected region. Depending on experience, patterns are stored to extend existing patterns. People who are actively involved in, say, physical sports store a variety of motor control patterns in their brains. They also store higher-level versions of them: patterns that determine the sequence of motor control patterns to use.

fMRI experience

A brain scan, such as Functional Magnetic Resonance Imaging (fMRI), measures brain activity indirectly, by detecting changes in blood oxygen levels.

Listening to language differs from speaking it, of course, since speech needs motor control (moving our mouth, voice box, and so on), while listening involves receiving audio input and determining what it means in the current conversation.

When a patient is talking, a brain scan shows a wide range of active brain areas, including the regions for comprehension and for speech production.

fMRI is a good tool to see the areas a brain uses, but does it show that regions are (a) performing some kind of computation, or (b) matching patterns and signaling the match to their connected patterns?

(a) Brain as processors

In the computational model of a brain, the active regions represent some kind of computation that we don’t understand. A brain region receives data from other regions and processes it, then sends its output as data to other brain regions.

There is no obvious flow from input to output as in a computer. Why not?

Under a processing paradigm, a region’s activity reflects the processing effort required, and connections transfer data between regions.

(b) Brain as pattern-matchers

In a pattern-matching system, we would expect an fMRI display to show active regions signaling the regions they are bidirectionally connected to, which then also become active. Active sensory regions determine complex experiences by linking to multisensory patterns.

Regions will also be active when sensory input is recalled! When multisensory patterns are activated, they link back to their sensory constituents, and they lead forward to combinations, or to sequences of those combinations. Brains don’t have an endpoint.
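To picture that prediction, here is a toy spreading-activation sketch in Python. The patterns, the regions assigned to them, and the `activate` function with its `hops` limit are all hypothetical; spreading activation over a graph is a stand-in chosen for illustration, not PT's stated mechanism. It shows the point above: activating a multisensory pattern, with no sensory input at all, still activates sensory regions through its backward links.

```python
# Hypothetical patterns, each tagged with the region assumed to store it.
REGION = {
    "barking sound": "auditory cortex",
    "dog shape": "visual cortex",
    "fur texture": "somatosensory cortex",
    "dog": "multisensory area",
    "dog chases ball": "sequence area",
}

# Forward links: constituent -> combinations or sequences it takes part in.
FORWARD = {
    "barking sound": ["dog"],
    "dog shape": ["dog"],
    "fur texture": ["dog"],
    "dog": ["dog chases ball"],
}

# Backward links are the same connections followed in reverse.
BACKWARD = {}
for low, highs in FORWARD.items():
    for high in highs:
        BACKWARD.setdefault(high, []).append(low)


def activate(start, hops=1):
    """Spread activation `hops` links forward and backward from `start`."""
    active, frontier = {start}, {start}
    for _ in range(hops):
        frontier = {
            nxt
            for p in frontier
            for nxt in FORWARD.get(p, []) + BACKWARD.get(p, [])
            if nxt not in active
        }
        active |= frontier
    return active


# Recalling "dog" with no sensory input still lights up the sensory regions.
regions = sorted({REGION[p] for p in activate("dog")})
print(regions)
# ['auditory cortex', 'multisensory area', 'sequence area', 'somatosensory cortex', 'visual cortex']
```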

Motor output generates the movement of the animal. PT’s hierarchy allows pattern refinement and efficient distribution.

In the case of an event, one or more of the associated patterns are being matched for potential use. Does an fMRI show those patterns? That’s hard to tell. It would need to follow the neurons: their reverse links to see what they represent, and their forward links to see what that pattern contributes to.

I saw evidence of the pattern-matching paradigm in a scan. It showed that when a person thinks about motion, even without actually moving, the cerebellum (which enables smooth motion) becomes active.

Meaning Representation

Grandmother cells

One exciting proposal in neuroscience was the search for specialized neurons that fire[1] upon seeing a particular person (the so-called Grandmother cell, or Jennifer Aniston neuron)!

Let’s say an experiment today shows that a particular cell activates when the subject is shown an image of Jennifer Aniston. The quote below is hypothetical:

“The patients, who were fully conscious, often had a particular neuron fire, suggesting that the brain has Aniston-specific neurons.”

Context (Predicates and Referents)

Referents are things our brain experiences, like Jennifer Aniston (JA). A brain eliminates ambiguity relating to referents: when it stores knowledge about something, that knowledge doesn’t bleed over into similar things. JA memories don’t get confused with things done by Halle Berry! This is context: everything is consistent. Context can add a wealth of information about a referent, using relations/predicates. Examples of predicates for JA are that she is ‘a blonde’ and ‘pretty’, and was ‘in Friends.’
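The no-bleed property can be sketched in Python by keying every predicate to exactly one referent. The classes and methods (`Referent`, `Context`, `learn`, `holds`) are hypothetical, and ‘an actor’ is added as an illustrative predicate that the two referents share. A shared predicate does not merge them.

```python
from dataclasses import dataclass, field


@dataclass
class Referent:
    """A thing the brain has experienced, with the predicates known about it."""
    name: str
    predicates: set = field(default_factory=set)


class Context:
    """Toy context store: each piece of knowledge attaches to one referent only."""

    def __init__(self):
        self.referents = {}

    def learn(self, name, predicate):
        # Create the referent on first mention, then attach the predicate to it alone.
        self.referents.setdefault(name, Referent(name)).predicates.add(predicate)

    def holds(self, name, predicate):
        """True only if this predicate was learned about this referent."""
        ref = self.referents.get(name)
        return ref is not None and predicate in ref.predicates


ctx = Context()
for p in ("a blonde", "pretty", "in Friends", "an actor"):
    ctx.learn("Jennifer Aniston", p)
ctx.learn("Halle Berry", "an actor")

print(ctx.holds("Jennifer Aniston", "in Friends"))  # True
print(ctx.holds("Halle Berry", "in Friends"))       # False: no bleed-over
```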

Computational Grandmother Cells

How does the processing model explain a grandmother cell activating for a particular recognition? Do we assume the neuron that fires is the processing element for its meaning? Perhaps the model would suggest that the cell is some kind of database index, pointing to where an individual’s record is stored.

The neuron-as-computer model has a problem: how did the neuron learn what it knows? Who wrote the program to store its data, and why would one neuron take on a particular task over any other?

Pattern-matching Grandmother Cells

PT predicts that one or more cells will activate upon recognition of the multisensory object. Given the way PT stores meaning, if we activated the Jennifer Aniston cell, its forward projections would signal the patterns from her context, and its backward projections would signal sensory experiences of her. So the brain would have a number of active regions as the result of activating a single patom.

In PT, finding the Jennifer Aniston cell in a brain would require excluding these various associations. The better you know someone, the more associations PT predicts. Brains don’t have internal labels for their connections, which makes understanding the activation in a scan harder!

The referenced Wikipedia article discusses some of this research, but it does not explain the purpose of the cell activation. To stress the point, in PT the key cell could fire for any patom related to Aniston. She is female, so that cell would fire. She has blond hair, so that cell would fire. She acted in Friends, so that cell would fire.

Another example is multimodal, involving an auditory sign as well as visual input: a cell could be activated by both images of Halle Berry and her name. The cell:

‘would fire not only for images of Halle Berry, but also to the actual name "Halle Berry"’

In this case, because both images of someone and their name are part of the same patom, recognizing either one activates the entire patom.
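A minimal Python sketch of a multimodal patom, assuming its sensory forms (backward links) and its context (forward links) can be listed as plain labels. The `Patom` type, the `recognize` and `activation` functions, and the specific labels are hypothetical. Either an image or the name finds the same patom, and activating it brings in everything linked to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patom:
    """Hypothetical multimodal pattern: sensory forms link backward,
    context links forward."""
    name: str
    sensory_forms: frozenset  # e.g. face images, the written or spoken name
    context: frozenset        # predicates and events it leads forward to


HALLE_BERRY = Patom(
    name="Halle Berry",
    sensory_forms=frozenset({"image: photo", "image: drawing", "word: Halle Berry"}),
    context=frozenset({"an actor"}),
)


def recognize(stimulus, patoms):
    """Return the patoms that have the stimulus among their sensory forms."""
    return [p for p in patoms if stimulus in p.sensory_forms]


def activation(patom):
    """Everything that signals once the whole patom is active."""
    return {patom.name} | patom.sensory_forms | patom.context


by_image = recognize("image: photo", [HALLE_BERRY])
by_name = recognize("word: Halle Berry", [HALLE_BERRY])
print(by_image == by_name)  # True: an image or the name reaches the same patom
print(sorted(activation(by_name[0])))
```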

Summary

A theory of brains is needed to (a) help neuroscience better explain what is going on, in order to help patients, and (b) help AI design machines that are more capable of emulating biological animals like us!

Instead of focusing on the processing power of neurons, PT shifts the focus to brain regions, which it treats as the brain’s storage locations. Linking these regions enables complex motions and sensory recognition: the building blocks for all human capabilities.

While the search for the processing tricks used by neurons continues, no consistent explanation of human brain function emerged after the arrival of digital computers until Patom theory. Adopting patoms as the patterns stored inside a brain region explains much and enables experimentation to verify or falsify the model.

Science can progress better with a working hypothesis. “We don’t know how a brain works” isn’t useful. PT is useful even though it only explains what the brain does, rather than how it does it.

[1] A neuron ‘firing’ means it becomes active once its positive (excitatory) inputs, less its negative (inhibitory) inputs, reach its threshold. In PT, firing means signaling after a match.
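This definition of firing can be written as a one-line threshold rule. It is a simplification, assuming inputs simply add up (real neurons integrate inputs over time and across dendrites). The function name `fires` and the numbers are illustrative.

```python
def fires(excitatory, inhibitory, threshold):
    """Toy threshold unit: fire when excitatory minus inhibitory input reaches threshold."""
    return sum(excitatory) - sum(inhibitory) >= threshold


# Inputs in arbitrary integer units.
print(fires([4, 5, 3], [1], threshold=10))  # 12 - 1 = 11 -> True
print(fires([4, 5], [2], threshold=10))     # 9 - 2 = 7 -> False
```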
