Today’s AI is not getting closer to AI’s goals, and that’s a problem.

Sure, there’s a lot of activity in AI around LLMs like ChatGPT, with outrageous hype claiming that human-level AI is approaching, but that claim is easy to falsify.

Breathless announcements of improvements on artificial benchmarks are no match for science, even when billions of dollars of investment are chasing trillions of dollars in value! In science, we can see goals that remain stuck despite benchmark successes.

It’s hard to critique the lack of progress in AI quickly, so let me talk through some issues today and go into more detail in the coming weeks. But always keep in mind that AI should aim at human-level skills, and that current limitations like errors or lack of trust should not be accepted, let alone become the goal.

After all, goals can be whatever we want them to be.

So What Is the Goal of AI?

Strong AI should solve the long tail of driverless-car problems using its vision and world knowledge.

But OpenAI has an AGI plan that excludes the hard problems of AI, leaving its AGI blind, deaf, mute and paralysed. For marketing purposes that’s fine, but their definition of AGI moves the goalposts to avoid solving the problems of AI. Worse, industry, government and educational institutions may follow them blindly because of the scale of their investments.

My point is that there is a big gap between the goals of AI and OpenAI’s definitions, which suit the limitations of their LLMs rather than the more sensible AI goals of the past.

Along these lines, the ultimate goal for autonomous cars requires animal-level sensory and motor control, and probably human-level control to handle emergency situations.

Should it drive off the road into a stationary truck to avoid hitting a child sitting on the road, or continue on to protect its occupants if it were a small animal on the road? Human-like safety requires many quick decisions like these.

What About the Goals for Human Language on Machines?

If machines could speak our language, they would be much more useful. A number of different approaches have been taken to this problem, known as Natural Language Understanding (NLU), most recently including the ones at my company, Pat Labs.

Noam Chomsky’s formal model from the 1950s failed for NLU because its reliance on syntactic units called parts of speech makes it too ambiguous.

Computational linguistics, also based on the syntactic model, failed from the 1990s to around 2016 for a similar reason: it is too ambiguous even for machine learning to handle.

LLMs have significant problems stemming from their statistical design. The most obvious is that they make stuff up.

But for use in other applications like agents, LLMs don’t work out what is being asked; instead, they generate text as an answer. Because they don’t understand what was asked and then find the answer, the strategies for dealing with these limitations end up as additional subsystems. And ultimately, statistical data models lack backward compatibility, which makes them expensive to maintain.

As Thomas G. Dietterich, a pioneer in machine learning, has explained, LLMs hold a statistical model of a knowledge base, and knowledge should be split from language.

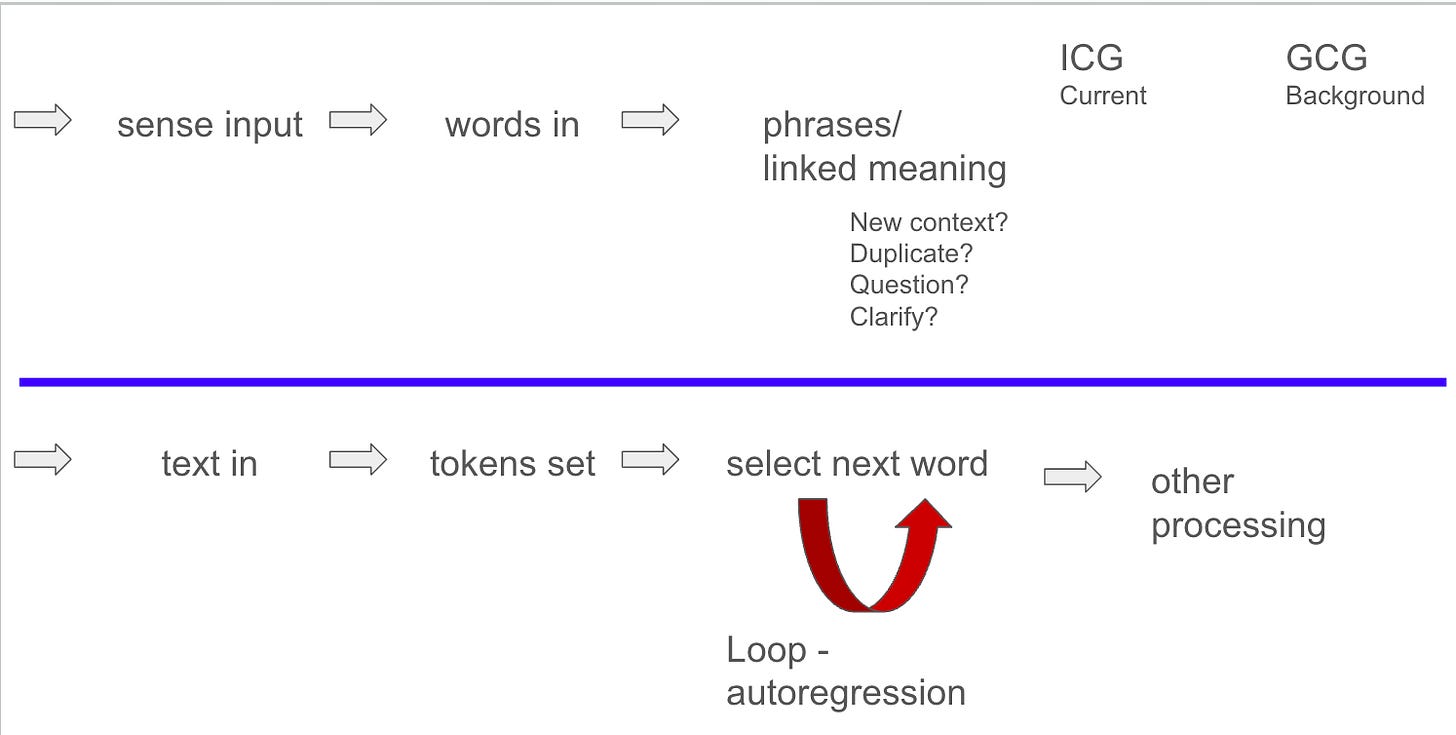

This leads to the cognitive science model. My brain model, Patom theory (PT), stores patterns at their edge positions and then links them together. This model allows any connected brain region to serve patterns to subsequent regions without encoding them, as we see from the effects of brain damage. It removes the need for search in the system: since patterns are atomic, you can just follow their links.
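To make the idea of linked, atomic patterns more concrete, here is a toy sketch in Python. It is not Pat Labs’ implementation, and the names `Pattern`, `store` and `recognise` are hypothetical. It assumes only what the paragraph above states: each stored pattern is an atom that links to the higher-level patterns built from it, so recognition follows those links from the active inputs instead of searching every stored pattern.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False: patterns compare and hash by identity, like atoms
class Pattern:
    """A stored pattern: a named atom that links up to the patterns that use it."""
    name: str
    parts: tuple = ()
    used_by: list = field(default_factory=list)


def store(name, parts):
    """Store a new pattern made of existing patterns and link each part up to it."""
    pattern = Pattern(name, tuple(parts))
    for part in parts:
        part.used_by.append(pattern)
    return pattern


def recognise(inputs):
    """Find patterns whose parts match the inputs, in order, by following links
    upward from each input instead of searching every stored pattern."""
    candidates = None
    for item in inputs:
        linked = set(item.used_by)
        candidates = linked if candidates is None else candidates & linked
    return [p for p in (candidates or set()) if p.parts == tuple(inputs)]


# Hypothetical demo: letters are stored first, then words are linked from them.
c, a, t = (store(ch, []) for ch in "cat")
cat = store("cat", [c, a, t])
act = store("act", [a, c, t])
print([p.name for p in recognise([c, a, t])])  # ['cat']
print([p.name for p in recognise([a, c, t])])  # ['act']
```

Because `recognise` only visits the patterns linked from its inputs, its cost depends on those links rather than on the total number of stored patterns, which is the sense in which following links replaces search in this sketch.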

In conjunction with Role and Reference Grammar (RRG) linguistics, separating a language’s words and phrases from their mapping to meaning in context creates the split between language and knowledge.

As you can see, the moving parts of human language are words and phrases linked to meaning in context. Context isn’t trivial, but it isn’t language-specific either, and because it isn’t, any human can integrate their senses with meaning.
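The split between language and knowledge can also be sketched in a few lines. The following toy Python example is an illustration under stated assumptions, not RRG or Patom theory as implemented at Pat Labs: the `LEXICON` and `KNOWLEDGE` tables, the sample words and the scoring rule in `interpret` are all hypothetical. Each language maps words to candidate meanings, the meanings live in one shared, language-independent store, and context (a set of meanings already active) selects among the candidates. When context cannot decide, the function returns `None` so that the system can ask for clarification rather than guess.

```python
# Language layer (hypothetical): word forms map to candidate meanings, per language.
LEXICON = {
    "en": {"bank": ["riverbank", "financial_bank"], "river": ["river"], "money": ["money"]},
    "fr": {"banque": ["financial_bank"], "rive": ["riverbank"], "argent": ["money"]},
}

# Knowledge layer (hypothetical): meanings and their associations, shared by all languages.
KNOWLEDGE = {
    "riverbank": {"river", "water"},
    "financial_bank": {"money", "loan"},
    "river": {"water", "riverbank"},
    "money": {"financial_bank"},
}


def interpret(word, lang, context):
    """Return the meaning of `word` best supported by the active `context`,
    or None when the word is unknown or context cannot choose (ask to clarify)."""
    candidates = LEXICON[lang].get(word, [])
    # Score each candidate by how many of its associations are already active.
    scored = [(len(KNOWLEDGE.get(m, set()) & context), m) for m in candidates]
    if not scored:
        return None
    best = max(score for score, _ in scored)
    winners = [m for score, m in scored if score == best]
    return winners[0] if len(winners) == 1 else None


print(interpret("bank", "en", {"river"}))    # riverbank
print(interpret("banque", "fr", {"money"}))  # financial_bank
print(interpret("bank", "en", set()))        # None: needs clarification
```

Adding a language in this sketch means adding only a new `LEXICON` entry; the knowledge layer is reused unchanged, which is the practical benefit of keeping the two apart.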

Systems based on this cognitive science approach promise to cost something like a tenth of an LLM or less, while adding testability, backward compatibility and low power consumption.

The Real Goals of AI

AI holds the key to killer applications on a scale we have never seen before. It is easy to see the trillions of dollars made possible by successful robotics and other technology. The goal of agents that can help users in their own language is very compelling, and it is one of the reasons the 2014 chatbot bubble was so strong, provided the underlying technology was machine learning, from what I could see at the time.

Working AI will enable stalled projects to progress because it can provide the missing pieces currently factored out of today’s compromise systems.

I discuss the killer apps in my recent books, but imagine human-level capability in speech recognition, automatic translation, accurate transcription of language, driverless cars, home robotics, lossless knowledge storage that any language can express, language-learning robots and general online agents.

Cognitive science is the next generation of AI because it will enable stalled projects to be completed. Speech recognition, for example, is good, but it doesn’t work like a person, so it is just an error-prone tool. So too is automatic translation. So too is automatic captioning. So too are LLMs. So too are conversational agents.

Rather than generating probable text, a system should understand what it receives and clarify it where needed. Clarification, not just answering, is at the core of communication.

Understanding what language is communicating promises to transform today’s AI tools into ubiquitous ones. It has been the Holy Grail of Silicon Valley for decades, and its elusiveness has led to today’s impasse.

The Business of AI

The investment world is keen to see human-level intelligence in machines, and the current investment model seems to value LLMs as being on that path. But LLMs are easily disrupted, as we recently saw with the Chinese company DeepSeek, which created an LLM for 5% of the cost of its American competitors.

Cloud hosting providers have been the big winners of the recent AI race, which has even led the US government to support an initiative to build new datacenters to meet the excessive power and computing needs of LLMs.

But while an announcement from DeepSeek can deflate speculation about the LLM form of AI, the real competitor remains cognitive science implementations such as the ones we developed at Pat Labs.

Rather than needing billions of dollars of new datacenters and power sources, new cognitive-science-based AI systems could run on laptops and cloud servers like other modern applications. The human brain uses about 12 watts of power, and science can help us drive the cost of AI towards that level while improving accuracy.

Why the Next Generation of AI Will Win

The evidence against LLMs being a long-term proposition has been clear for some time.

Simply in terms of cost, with no monopoly and a lack of trust in their output, why persevere with LLMs if a trustworthy alternative arrives?

To follow Professor Dietterich’s suggestion, let’s say you are Amazon. To interact with users who want to buy your products, why should you pay for knowledge of molecular biology, space travel, theoretical dance and other topics just to use language to find, explain and sell products? And without context, how can conversations be controlled?

I’ll explore these questions more in upcoming videos.

Do you want to get more involved?

If you want to get involved with our upcoming project to build a gamified language-learning system, the site to track its progress is here. You can stay informed by adding your email to the contact list on that site.

Do you want to read more?

If you want to read about applying brain science to the problems of AI, you can read my latest book, “How to Solve AI with Our Brain: The Final Frontier in Science”, which explains the facets of brain science we can apply and why the best analogy today is the brain as a pattern-matcher. The book is available here on Amazon in the US.

In the cover design below, you can see the human brain incorporating its senses, such as the eyes. The brain’s workings are being applied to a human-like robot, which brain science is improving towards full human emulation in looks and capability.